I've been wondering how interdependent the different parts of the uptake machinery are.

We have long known that some H. influenzae mutants (knockouts of rec2 or comF) could take up DNA but not translocate it into the cytoplasm. They take up about the same amounts of DNA as do wildtype cells, but the DNA stays intact (double-stranded, not degraded). Because this DNA doesn't get cut by the nucleases we know to be active in the cytoplasm, the DNA must be accumulating in the periplasm. This means that the uptake machinery can continue to operate in the absence of translocation.

What about ComE1? This H. influenzae protein is a homolog of the well-characterized B. subtilis protein ComEA. In B. subtilis, ComEA is thought to sit on the periplasmic side of the membrane, under the thick cell wall (there is no outer membrane). The pseudopilus machinery (specified by the ComG proteins) passes DNA across the cell wall to ComEA, and ComEA passes the DNA to the membrane transport machinery (homologs of Rec2 and ComF).

ComEA is essential for DNA uptake in B. subtilis, and its homologs are essential in other competent bacteria. But the H. influenzae homolog, ComE1, is not essential - knockouts reduce transformation by only about 10-fold, and reduce uptake by only about 7-fold. Nevertheless, let's assume that ComE1 does more or less the same thing that ComEA does - accepts DNA from the pseudopilus and passes the DNA on to the machinery that moves it across the inner membrane.

Here's the question: Can the pseudopilus keep doing its job (reeling DNA in) even if ComE1 isn't passing the DNA on? Put another way, do mutations in comE1 reduce DNA uptake, or are they like mutations in rec2 and comF? I already told you the answer - a comE1 knockout reduces DNA uptake as well as transformation. We've known this for several years, but I only now put it into the context of the newer B. subtilis results.

So this means that my model of DNA uptake has to include ComE1 accepting the DNA from the pseudopilus and passing it on to Rec2 and ComF if they're available, or letting the DNA pile up in the periplasm if they're not. If ComE1 isn't there, the pseudopilus stalls. The residual transformation we see in cells lacking ComE1 probably means that about 10% of the DNA finds its way from the pseudopilus to the translocation machinery even in the absence of ComE1, and that this machinery can transport DNA that hasn't been handled by ComE1.

This interpretation makes a prediction about an experiment we've already done - that the reduced DNA uptake seen in comE1 mutants is still USS-dependent. And that's what we see. There's another prediction, that comE1 mutants should not be defective in the initiation of uptake (passing the initial loop through the pore) but only in the subsequent reeling in of the DNA by the pseudopilus. Perhaps we can test this using laser-tweezers, or by cross-linking analysis. From this perspective the comE1 mutant may be very useful in helping us dissect steps of uptake, as it would cause uptake to stall or slow.

This is all very satisfying. We've had the comE1 data for a long time, and tried to write it up, but haven't published it because it didn't seem to explain anything. I think our confusion arose from trying to distinguish between 'binding' and 'uptake' of DNA, whereas now I'm distinguishing between the 'initiation' and 'continuation' of uptake. Now that we have a framework for these results, they will make a nice little paper. Unfortunately the technician and M.Sc. student who did the work are long gone so they can't help rewrite the draft, and some of their experiments should be repeated and expanded a bit. Luckily one of the present post-docs is doing similar experiments on other strains, and it would probably be quite simple for her to do the needed experiments and help finish the paper (on which she would then be first author).

Not your typical science blog, but an 'open science' research blog. Watch me fumbling my way towards understanding how and why bacteria take up DNA, and getting distracted by other cool questions.

Sticky tip?

I'm deep into the literature on type four pili. (They're variously abbreviated as Tfp or T4P - I'm going to go with Tfp* because a Google search for Tfp pilin finds over 10,000 hits, whereas a search for T4P pilin finds only 273.)

I was thinking that the USS-specific interaction between pilin subunits and the DNA sequence flanking the core USS would be mediated by the positively charged regions of the pilin that are exposed on the sides of the pilus, but it could instead be a region that is exposed only at the pilus tip.

When Tfp cause adhesion or twitching motility by sticking to surfaces, it's the tip of the pilus that does the sticking. Because of the way the subunits assemble, the top and part of the sides of each non-tip subunit are covered by the subunits above them, but the tops and parts of the sides of the subunits at the tip are exposed, and can interact with their environment.

If the side of the pilus was interacting with the USS outside of the pore, when the pilus retracted the pore would need to accommodate the pilus plus the two sides of the DNA loop. But the pores that have been studied aren't big enough for this; they are about 6-7nm across when open, and can fit a pilus (about 5nm), or two filaments of double-stranded DNA (2nm each), but not all three at once, and maybe not even the pilus plus one filament of DNA.
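Here's a rough way to check that size argument. It's only a sketch: it treats the pore, the pilus and each DNA duplex as rigid circles, and takes the pilus as 5 nm and the DNA as 2 nm across (my reading of the numbers above). The function names are made up for illustration. For one or two objects the minimum channel width is exact; for three or more it is only a lower bound, so a 'True' there means 'not ruled out', not 'fits'.

```python
PILUS_NM = 5.0   # assumed pilus diameter
DSDNA_NM = 2.0   # diameter of one double-stranded DNA filament


def min_channel_diameter_nm(diameters_nm):
    """Narrowest circular channel that could hold these round cross-sections.

    Exact for one or two objects. For three or more it is only a lower
    bound: the channel must at least span the two widest objects.
    """
    widest = sorted(diameters_nm, reverse=True)
    if not widest:
        raise ValueError("need at least one object")
    if len(widest) == 1:
        return widest[0]
    return widest[0] + widest[1]


def not_ruled_out(pore_nm, diameters_nm):
    """True if the pore is at least as wide as the minimum (or lower bound)."""
    return min_channel_diameter_nm(diameters_nm) <= pore_nm


if __name__ == "__main__":
    cargo = {
        "pilus alone": [PILUS_NM],
        "two DNA filaments": [DSDNA_NM, DSDNA_NM],
        "pilus + one DNA": [PILUS_NM, DSDNA_NM],
        "pilus + two DNA": [PILUS_NM, DSDNA_NM, DSDNA_NM],
    }
    for pore_nm in (6.0, 7.0):
        for label, diameters in cargo.items():
            print(pore_nm, label, not_ruled_out(pore_nm, diameters))
```

With these assumptions the pilus plus one DNA needs the full 7 nm, which is why I say 'maybe not even' that combination.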

Thus continuing uptake may require that the pilus stay in the periplasm, and reel the DNA in through the pore (as I drew it in this post). But when uptake is being initiated the pilus must protrude through the pore to form the initial attachment to the DNA. Pulling the DNA in through the pore would be a lot easier if the DNA was attached to the pilus tip rather than to its side.

I have an initiation figure that shows the DNA binding to the side of the pilus; I'll modify it and add it to this post later.

* Except of course when corresponding with someone who prefers T4P, such as the author of a recent helpful review.

The proposal is finally taking shape

My research proposal is finally reading more like a narrative and less like a disjointed string of ideas.

One experiment that I'll be looking for advice on is to cross-link transforming DNA to the proteins that are taking it up, re-extract the DNA with its attached proteins, undo the cross-links, and identify the proteins.

- The DNA will be our USS-1 fragment, about 200bp with a perfect USS in the middle. It will have a biotin molecule on each end.

- The 'taking up' could be done by cells, but we'll get cleaner results if we can start with a preparation of cell membranes, or blebs ('transformasomes') or pili, i.e. material enriched for the uptake machinery but unable to translocate DNA across the inner membrane.

- The cross-linking will probably be done with the chemical formaldehyde, because these cross-links can easily be undone (though I don't know how).

- Re-extraction of the DNA plus any cross-linked proteins will use the biotin tags, by mixing everything with agarose beads covered with the biotin-binding protein streptavidin. We can easily then separate the beads, with their attached DNA with its cross-linked proteins, from everything that isn't cross-linked to the DNA.

- Then the cross-links will be undone and the DNA digested away with DNase I. (Maybe only one of these steps is needed?)

- Then the protein mixture will be examined by a mass spectrometry technique called MALDI-TOF, which separates the proteins by their mass (strictly, their mass-to-charge ratio). MALDI-TOF gives very precise mass measurements, and these may be sufficient to let us identify specific proteins.

- Identifications can be checked by repeating the cross-linking analysis with mutant cells lacking known proteins.
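The identification step could be as simple as comparing each measured mass with the masses predicted for candidate proteins from the genome sequence. Here's a minimal sketch of that comparison. The function name, the tolerance and the protein names and masses in the example are all placeholders I made up, not real values, and a real analysis would also have to allow for processing and modification of the proteins.

```python
def match_masses(observed_da, candidates_da, tolerance_ppm=500.0):
    """Map each observed mass to the candidate proteins within tolerance.

    observed_da   -- measured masses, in daltons
    candidates_da -- dict of {protein name: predicted mass in daltons}
    tolerance_ppm -- allowed mismatch, in parts per million of the observed mass
    """
    matches = {}
    for observed in observed_da:
        window = observed * tolerance_ppm / 1e6
        matches[observed] = sorted(
            name
            for name, predicted in candidates_da.items()
            if abs(predicted - observed) <= window
        )
    return matches


if __name__ == "__main__":
    # Placeholder names and masses, for illustration only.
    candidates = {"protein_A": 12000.0, "protein_B": 12004.0, "protein_C": 45000.0}
    observed = [12001.0, 45100.0]
    for mass, names in match_masses(observed, candidates).items():
        print(mass, names or "no match")
```

In this made-up example the first mass matches two candidates and the second matches none, which is the kind of ambiguity the mutant controls would resolve.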

I also have the name of someone to consult with about the cross-linking. Given the limited number of uptake events we can have, we'll want the cross-linking to be as efficient as possible.

Rachael's boyfriend's plasmid, and rifampicin

I ran into a colleague at the coffee pot today, and asked his expert advice about ways to investigate whether RNA polymerase pauses or stalls when transcribing the sxy gene.

He said that pausing is quite easy to show using a commercially-available E. coli in vitro system (as I had hoped it would be), but that showing that a H. influenzae sequence causes pausing in this system would only be significant if we first showed that the H. influenzae sxy gene was regulated in vivo in E. coli as it is in H. influenzae. The alternative is to use a H. influenzae in vitro system, but we would have to purify the components ourselves, which is well beyond both our abilities and our real interests.

We might be able to show the regulation in E. coli, if the sxy gene wasn't so toxic to E. coli (see Making lemonade). Well, we could perhaps work with a truncated gene, subject to the same transcriptional controls but not producing Sxy protein... Hey! In fact, one of the very first sxy plasmids I made would be just the thing! The plasmid is named pDBJ90 (the name I think is the initials and year of the boyfriend of the student who made it); it contains only the 5' half of the sxy coding region but all of the upstream sequences that affect its transcription. And it's in a high-copy vector. And as far as I know the insert is stable, though we've learned that we should always check the sequence. I don't know whether our Sxy antibody will recognize the truncated protein it should produce.

What would the experiment be? Grow the E. coli cells with the plasmid in minimal medium with added purines and pyrimidines. Add cAMP to induce the sxy promoter, and transfer half the culture to the same medium with no purines or pyrimidines. Measure the amounts of sxy mRNA and protein at several time points for each half of the culture. If the ratio of protein to RNA is higher when the purines and pyrimidines are absent, then we can do the in vitro experiment.
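The comparison itself is simple arithmetic. A minimal sketch, assuming we have paired (protein, mRNA) measurements in arbitrary but consistent units at matched time points for each half of the culture; the function names are hypothetical and there are no real numbers here.

```python
def protein_per_rna(protein, rna):
    """Amount of Sxy protein per unit of sxy mRNA."""
    if rna <= 0:
        raise ValueError("mRNA measurement must be positive")
    return protein / rna


def starvation_effect(with_nucleotides, without_nucleotides):
    """Fold change in protein-per-mRNA at each matched time point.

    Each argument is a list of (protein, rna) pairs, one per time point.
    Values above 1 mean more protein per mRNA without purines and pyrimidines.
    """
    if len(with_nucleotides) != len(without_nucleotides):
        raise ValueError("time points must be matched between the two halves")
    return [
        protein_per_rna(*starved) / protein_per_rna(*fed)
        for fed, starved in zip(with_nucleotides, without_nucleotides)
    ]
```

Fold changes consistently above 1 across the time points would be the signal to go ahead with the in vitro experiment.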

The colleague also suggested an entirely new way to look at the relationship between sxy transcription and sxy translation. He reminded me that mutations in the genes for RNA polymerase can make the polymerase resistant to the antibiotic rifampicin, and told me that these mutations can affect the efficiency of transcription in ways that might change sxy expression. So here's the experiment plan:

Starting with wildtype (rifS) H. influenzae cells, select cells that are resistant to rifampicin. I think these are quite easy to select; we could try several different rifampicin concentrations. Pool all the colonies that grow up on rifampicin plates, and select hypercompetent ones by transforming the pooled cells with novR DNA while they are in log-phase growth in sBHI. If we get any hypercompetent cells, check whether the hypercompetence is caused by the rifR mutation, by using their DNA to transform fresh cells to rifR. If yes, we've shown that mutations in RNA polymerase can cause hypercompetence.

We do already have one RNA polymerase mutation that affects competence induction. It's an insertion that doesn't change the sequence of RNA polymerase but probably reduces the amount of polymerase in the cell, and it decreases competence rather than increasing it. I can make up a just-so-story that fits this mutant into our current model, but it's just handwaving.

Two directions at once?

More about DNA uptake:

Provided there’s enough space in the secretin pore, there’s no reason why the continuing-to-pull mechanism can’t act on both sides of the loop. This is especially true if, once the DNA has gotten started, the pilus doesn’t need to protrude through the pore but can just pull from within the periplasm, leaving the channel of the pore unblocked so DNA can move through it. The pore is easily wide enough for two double-stranded DNAs to pass through, and the non-specific binding between pilus and DNA that must be transmitting the pulling force can act on both DNAs at once, if they’re binding to different sides of the pilus.

Grappling with (and by) type IV pili

Transport of DNA across the outer membrane presents two big problems, neither of which has been well articulated to date. The first is the difficulty of getting started, which requires pulling an initial loop of double stranded DNA across the membrane. The second is the difficulty of continuing to pull a long fiber of DNA into the confined space of the periplasm.

Both are probably explained by the ability of the fibers called type IV pili to bind to DNA and to be pulled into the periplasm. Type IV pili are long narrow threads composed of identical protein subunits (pilins) arranged in a rope-like helical coil (about 6nm wide and up to 5 µm long). They are assembled in the periplasm by adding subunits to the base, and elongated and retracted by addition and removal of subunits. The pili that pull DNA are really 'pseudopili' because they're so short, but for simplicity I'll refer to both long and short ones as pili.

I've posted before about the getting-started problem (see for example Getting the kinks in), so here I'll try to explain the continuing-to-pull problem, whose importance I only realized a few days ago while discussing DNA uptake with one of the post-docs. Let's assume that a loop of DNA has bound to the pilus and been pulled across the outer membrane into the periplasm. And for now let's assume that we need only consider what's happening to the DNA of one side of the loop, and can ignore what might be happening to the other side of the loop.

The big problem is that retraction of the pilus can only pull the DNA in a short ways, no more than the length of the pilus. As the pili that transport DNA are too short to see even with electron microscopy, we think they must be barely long enough to protrude through the secretin pore, probably less than 20nm long. This is only 5 turns of the pilin helix, and only about 60bp of DNA (at about 0.34nm per base pair). So how does the pilus pull in DNA molecules that are at least 10 kb long? Here are figures illustrating two solutions.

The first is compatible with the way long pili cause adhesion and 'twitching motility'. The pilus attaches to DNA, pulls it in a bit by disassembling pilin subunits at the base, pulling the pilus down. It then lets go of the DNA, elongates a bit by adding subunits at the base, and grabs a fresh part of the DNA to pull on. In the figure I've shown the pilus as short enough to not protrude through the secretin pore, leaving the pore free for the DNA. This would limit the length of each pilus 'stroke' to the thickness of the periplasm. Under normal circumstances this would be only about 10nm, but the pilus may push the membranes further apart.
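To put rough numbers on this stroke-by-stroke model, here's a back-of-the-envelope sketch. It assumes B-form DNA with a rise of about 0.34 nm per base pair (a textbook value, not something we've measured), and uses the roughly 10 nm stroke and 10 kb fragment mentioned in this post. The function names are just for illustration.

```python
NM_PER_BP = 0.34  # rise per base pair of B-form DNA (textbook value)


def bp_spanned(length_nm):
    """Number of base pairs of straight duplex DNA spanning a given length."""
    return length_nm / NM_PER_BP


def strokes_needed(dna_bp, stroke_nm):
    """How many pull-release-elongate cycles it takes to reel in the DNA."""
    if stroke_nm <= 0:
        raise ValueError("stroke length must be positive")
    return dna_bp * NM_PER_BP / stroke_nm


if __name__ == "__main__":
    print(f"20 nm of DNA is about {bp_spanned(20.0):.0f} bp")
    print(f"10 kb at 10 nm per stroke needs about {strokes_needed(10_000, 10.0):.0f} strokes")
    print(f"10 kb at 20 nm per stroke needs about {strokes_needed(10_000, 20.0):.0f} strokes")
```

So even if the pilus can push the membranes apart and double its stroke, this model needs well over a hundred cycles per DNA fragment.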

The second model is more elegant, but requires a new mechanism that we have no direct evidence for. The first two steps are the same; the pilus binds DNA and pulls it in by disassembling subunits at the base of the pilus, which pulls the pilus down. But in this model new subunits are continuously added to the other end of the pilus, so it never gets shorter and continuously binds and pulls new DNA down into the periplasm.

The drawings are more-or-less to scale, but the periplasmic space may be thicker than shown, which would allow the pilus to be longer without obstructing the pore.

The first model is more parsimonious, in that it uses the retraction mechanism that we know works for the long pili that mediate adhesion and twitching motility. Initially I thought that the pilus might have difficulty letting go of the DNA before elongating, but when I drew the model I realized that, once the pilus becomes very short it will be unable to bind DNA and so release will be spontaneous.

Can we design experiments that distinguish between these models? And is this something that our big grant proposal should address? I'll leave the answers for another post.

First questions first

The other day we sat down together to take our first joint look at the grant proposal I'm writing. The proposal is still rather inchoate (first time I've used THAT word), especially the experiments section, but it's coming along.

Our model for how the secondary structure of sxy mRNA regulates its expression proposes that the speed of progress of RNA polymerase along the gene determines whether the secondary structure forms before ribosomes can bind and start translating. So I was proposing to directly test whether RNA polymerase pauses or stalls, especially when nucleotides are limited. But one of the grad students pointed out that we first need to show that the secondary structure does indeed block translation. Now she's given me information about a kit that should let us do just that, using cloned wildtype and mutant sxy sequences she's already made.

The kit uses the E. coli translation machinery to translate mRNAs that it makes from a user-provided DNA template with a T7 polymerase promoter. Her sxy clones have this T7 promoter - she's used it to synthesize the RNAs she's used for the RNase digestion analyses. We can use the sxy-1 and sxy-7 mutant RNAs to see if minor changes to the secondary structure change the ability of the E. coli ribosomes to start translation, and we can create a version that lacks most or all of the secondary structure as a positive control, to show how much translation happens in the absence of secondary structure.

It would be scientifically better to do this analysis with H. influenzae translation machinery (rather than E. coli), but we'd need to develop the system from scratch rather than using a kit, and I don't want us to invest that much work into this question. Once we know what happens with the E. coli kit, we can maybe try it with a H. influenzae cell-free lysate.

Does competence help cells survive replication arrest?

In the previous post I described my hypothesis that the CRP-S regulon unites genes that, in different ways, help cells cope with running out of nucleotides for DNA synthesis (dNTPs). The DNA uptake genes help by getting deoxynucleotides (dNMPs) from an alternative source (DNA outside the cell) and the genes for various cytoplasmic proteins help by stabilizing the replication fork until the dNTP supply is restored.

So we really ought to try to directly test whether becoming competent helps cells survive sudden nucleotide shortage. I think I tried this a long time ago (when I was a post-doc in Ham Smith’s lab). Then I was hoping to show that DNA uptake helped cells survive transfer to MIV. I did establish a clear survival curve for cells in MIV. I also found out that adding DNA didn’t make much difference.

I tested various competence mutants we had then - these were miniTn10kan insertions that reduced competence by knocking out various genes. The only one that I remember made any difference to survival was the one we now know knocks out CRP. This is the one mutation in that set that is definitely regulatory - it prevents induction of all the CRP-S regulon genes, and all the CRP-N regulon genes too.

My hypothesis predicts that crp- cells would not turn on the genes that help them survive this crisis, and so should survive worse. BUT (as I recall) the crp mutant cells survived MIV much better than the wildtype cells! Yikes, this is the opposite of my prediction. I need to go back and reexamine this old data.

Hmmm... The old experiments had compared the number of cfu (cells capable of growing into colonies) after 100 minutes in MIV to those after about 16 hours (overnight) in MIV. I compared 6 mutants to the wildtype strain. Five of them survived better, and one much worse. But the 'much worse' strain isn't really a competence mutant at all - we now know its mutation knocks out a DNA topoisomerase that non-specifically reduces the induction of many unrelated genes. Four of the mutants survived about 5-10-fold better, and the crp mutant survived about 100-fold better.
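For the record, this is how I'm calculating 'fold better'. It's a minimal sketch with made-up function names: survival is the overnight cfu divided by the 100-minute cfu, and each mutant's survival is then divided by the wildtype's.

```python
def survival_fraction(cfu_100min, cfu_overnight):
    """Fraction of the cfu present at 100 minutes that are still there overnight."""
    if cfu_100min <= 0:
        raise ValueError("100-minute cfu count must be positive")
    return cfu_overnight / cfu_100min


def fold_vs_wildtype(mutant, wildtype):
    """Mutant survival relative to wildtype survival.

    Each argument is a (cfu_100min, cfu_overnight) pair.
    Values above 1 mean the mutant survived better than wildtype.
    """
    return survival_fraction(*mutant) / survival_fraction(*wildtype)
```

Because each strain is normalized to its own 100-minute count, small differences in the starting culture densities shouldn't distort the comparison.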

One concern is that my hypothesis is about short-term survival, in the emergency situation created by transfer to MIV, and so wouldn't necessarily apply to overnight survival. I haven't explicitly tested short-term survival, but the numbers of cfu at 100 minutes should be a rough indicator of this, as the cultures were all grown to about the same densities before being transferred to MIV. The old data don't show the kind of dramatic difference I would hope to see if my hypothesis is correct. Time to think about a rigorous test.

Phage recombination

Way back, before we could measure changes in gene expression caused by transfer to MIV, we knew that it induced two independently measurable phenotypic changes. The first was that cells became able to take up DNA and recombine some of it into the chromosome. The other was that phage recombination was increased about 100-fold.

What is ‘phage recombination’? When cells are co-infected with two different mutant strains of the same phage, their DNAs can recombine in the cell while they are replicating, giving rise to some phage genomes that have neither mutation. For H. influenzae, we used three different temperature-sensitive mutants of the phage HP1, which could form plaques at 32°C but not at 40°C. Co-infecting non-competent cells with any two of these gave a very low frequency of recombinant phage, measured as plaques on lawns of cells grown at 40°C. But co-infecting competent cells gave about 100-fold more recombinant plaques.

This increased phage recombination was interpreted (by me and others) as evidence that transfer to MIV induced both DNA-uptake machinery and recombination machinery. I was looking for a way to distinguish between mutations that just knocked out a component of the uptake machinery and those that knocked out the regulatory machinery cells used to decide to become competent. So I used phage recombination to categorize mutants as regulatory or mechanistic - hypothesizing that mutations that eliminated both uptake and phage recombination were probably regulatory, as they affected two independent processes, whereas mutations that knocked out uptake but still induced phage recombination probably affected just a component of the uptake machinery.

Now we know which genes are induced by transfer to MIV. None of them qualify as recombination machinery. But this didn't bother me, for two reasons. First, I’d decided that cells take up DNA as a source of nutrients, so I didn’t expect recombination to be induced. Second, I’d forgotten all about the induction of phage recombination and my former interpretation of it.

But today I was reading my old (before we did microarrays) notes about all the competence genes we knew of, and one note mentioned a mutant that had very little phage recombination and whose gene I now know is part of the CRP-S regulon. This gene is comM; it specifies a protein that somehow protects incoming DNA from being degraded by a nuclease or nucleases present in the cytoplasm. The comM mutant takes up DNA, but its transformation frequency is very low because the DNA is degraded before it can recombine.

EUREKA! This makes sense. The reason phage DNAs can recombine better in normal competent cells than in non-competent cells is that competence induces comM, and the ComM protein prevents the nuclease from degrading the phage DNA recombination intermediates. Recombination intermediates have unusual DNA structures (exposed single strands, ends of strands, and four-way connections called Holliday junctions) which are vulnerable to general and specialized nucleases.

Another gene, dprA, does something similar. Mutations in dprA also allow incoming DNA to be rapidly degraded, and DNA brought in by the DNA uptake machinery doesn’t survive long enough to recombine with the chromosome. In my old notes I think I have data for its phage recombination - I bet it’s low. (Later - yes, it is.)

I have been hypothesizing (without any direct evidence) that both comM and dprA have evolved to be competence-induced because their jobs are to protect stalled replication forks from nucleases. Stalled replication forks have a lot in common with recombination intermediates; they often get into tangles that are structurally indistinguishable from the Holliday intermediates produced by recombination. I wonder if I can use phage recombination in some way to help sort this out?

(Note to self: The above sounds great, but don't forget the complication that the rec2 mutant also has low phage recombination, which is unexpected because we've been pretty sure it acts in DNA transport across the inner membrane.)

Too many questions

I just numbered the questions my draft grant proposal proposes to answer, and found that I have 19 questions! This is far too many, unless I'm asking for a zillion dollars, and I know I don't have the skills to administer that big a project.

But some of the questions are much more important and much more substantial than others. So I'll turn some of them into 'subsidiary questions' and "if time permits" questions. I would rather not simply leave them out, because then the grant panel will just complain "Why isn't she proposing to do X?".

The controversy surrounding the function of DNA uptake

I'm holed up in Indio, California, in an "RV Resort" for retirees, working on my grant proposal and checking out the local attractions (Washingtonia palms! The Salton Sea!).

Here's a few paragraphs from the proposal introduction, explaining the big question:

The consequences of DNA uptake are not in question. A cell that takes up DNA inevitably incurs the physiological costs of becoming competent and of transporting the DNA across its envelope. The cell also inevitably gets the incoming DNA’s nucleotides, reducing the demands on its biosynthetic or salvage pathways. Because DNA is abundant in natural environments, and nucleotides are very expensive to synthesize, the nucleotide benefit may be sufficient to compensate for the costs and thus to explain the evolution (origin and continuation) of competence. However if the incoming DNA recombines with the chromosome it may also change the cell’s genotype, which may increase or decrease the cell’s ability to survive and reproduce. The controversial question is whether natural selection on the machinery and regulation of DNA uptake has been influenced by these genetic consequences.

The conventional view is that bacteria take up DNA for recombination (i.e., that recombination has net benefits, and that these are sufficient to account for the evolution of competence). This derives partly from the now-discredited idea that sex in eukaryotes is easily explained by long-term benefits to the species, and partly from observation of ancient beneficial recombination events in bacterial genomes and recent ones in the laboratory. But there are also substantial costs to genetic recombination, because the homologous DNA in the environment comes from dead cells and is likely to carry excess deleterious mutations, and because recombination with heterologous DNA will usually disrupt the cell’s well-adapted genetic machinery. These genetic costs are easily overlooked because natural selection eliminates the cells incurring them.

Understanding the evolution of bacterial competence has major implications for our present far-from-satisfactory understanding of why sexual reproduction evolved in eukaryotes. The problematic hypothesis that meiotic sex evolved to create new combinations of genes is often supported by claims that bacterial ‘parasexual’ processes also evolved for this. Because conjugation and transduction are now known to be side effects of selection for more immediate benefits to cells or their genetic parasites, understanding competence is key. If the genetic consequences of competence have not shaped its mechanism or regulation, we will conclude that bacteria get all the genetic variation they need by accident, and thus that meiotic sex is a eukaryotic solution to a eukaryotic problem.

Direct experimental testing of proposed costs and benefits is not the best approach, because it is all too easy to create selection in bacterial cultures, and because laboratory conditions in no way replicate those of the natural environment. Rather, the best way to understand the evolution of competence is to understand its regulation and mechanism. Regulation is informative for all bacteria, as the genes that regulate competence evolved in the natural environment, and understanding the signals they respond to will give us a window on the consequences of competence that have been most beneficial. Because H. influenzae’s uptake specificity causes it to preferentially take up its own DNA, understanding the uptake mechanism responsible for this bias is a critical test of the importance of recombination.

Making lemonade

Plasmids carrying the sxy gene often acquire mutations; we have learned (painfully) that we need to recheck their sequences before using them in experiments. Our assumption has been that the Sxy protein is harmful to cells, at least when inappropriately expressed, and that the mutations are selected because they make this expression less harmful. I’ve always just treated this as a nuisance (a major nuisance), an obstacle to be tolerated because I haven’t seen any way to overcome it.

But last night I realized that we might be able to use it as a probe into what Sxy does. Although it's possible that Sxy’s toxic effects on cells have nothing to do with how Sxy induces expression of CRP-S genes, it’s more likely that the two effects are connected.

One obvious candidate connection is that Sxy affects how CRP acts, and perturbs CRP’s normal contributions to maintaining the cell's carbon and energy balance. An even more obvious candidate would be that inappropriate expression of CRP-S genes is toxic. (However the hypercompetence mutations cause such expression without being detectably toxic...) A less obvious but more exciting candidate is that Sxy activates transcription by interacting with RNA polymerase (or a general transcription factor), and that inappropriate expression interferes with transcription at other genes.

So the simple experiment is to propagate a sxy-expression plasmid in H. influenzae (or E. coli) without population bottlenecks (i.e. in a large culture grown for many generations), plate for single colonies, and isolate plasmids and sequence inserts from multiple colonies.

This will probably give a mix of obvious loss-of-function mutations (stop codons, deletions) and amino acid substitution mutations. My recollection of the mutations we’ve seen in the past is that they were mostly substitutions, which is good as these will be the interesting ones. If the majority are loss-of-function mutations we might want a way to screen these out before sequencing. We could do this if we started with a sxy- mutant, although this would need to be a complete deletion so that it wouldn’t recombine with the sxy gene on the plasmid. How would we screen them? Would screening for function be more trouble than it’s worth? I guess this would depend on how common the loss-of-function mutations turned out to be.

Mutations creating stop codons are expected to arise less frequently than simple substitutions (only three of the 64 codons specify STOP). Deletions are also expected to be relatively rare, at least in the absence of predisposing short repeats. So if we found that the majority are loss-of-function mutations, this might itself be our answer. This would tell us that Sxy is intrinsically harmful, and that getting rid of Sxy entirely is much more effective than changing its sequence.
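The expectation that stop-creating mutations are rare can be made a little more concrete by counting. The sketch below enumerates every single-base substitution in every sense codon of the standard genetic code and counts how many produce a stop codon. It assumes that all codons are used equally and that all substitutions are equally likely, neither of which will be exactly true for a real sxy insert, so the result is only a rough baseline.

```python
from itertools import product

BASES = "TCAG"
STOP_CODONS = {"TAA", "TAG", "TGA"}  # stop codons of the standard genetic code


def count_nonsense_substitutions():
    """Return (stop-creating substitutions, all substitutions) over the 61 sense codons."""
    sense_codons = [
        "".join(triplet)
        for triplet in product(BASES, repeat=3)
        if "".join(triplet) not in STOP_CODONS
    ]
    nonsense = 0
    total = 0
    for codon in sense_codons:
        for position in range(3):
            for base in BASES:
                if base == codon[position]:
                    continue  # not a substitution
                mutant = codon[:position] + base + codon[position + 1:]
                total += 1
                if mutant in STOP_CODONS:
                    nonsense += 1
    return nonsense, total


nonsense, total = count_nonsense_substitutions()
print(f"{nonsense} of {total} single-base substitutions create a stop codon "
      f"({100 * nonsense / total:.1f}%)")
```

Under these simplifying assumptions only about 4% of single-base substitutions create a stop codon, so a collection of mutant plasmids dominated by stop codons would be far from the unselected expectation.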

So the first approach would be to sequence every plasmid that was isolated from a reasonably large colony. (Choosing large colonies will reduce the frequency of unchanged inserts.) Then we would compare changes, looking for clustering of substitution mutations. [This sounds like a good project for an undergraduate.] And we would use our anti-Sxy antibody to confirm that the plasmids with substitution mutations still produce full-length Sxy protein.

Then we would characterize the effects of the mutations on Sxy’s ability to induce CRP-S genes, probably by transforming the mutant version into wildtype cells and doing competence assays. We'd also look for effects on any other properties of Sxy we have a handle on, such as pull-down of complexed proteins, or two-hybrid interactions.

Does PurR repression explain the 'FC' results?

A couple of months ago I posted about the 'fraction competent' (FC) assay we use to tell whether only some of the cells in a culture are able to take up DNA. I mentioned that, when we assay cultures that are only partially competent (i.e. have lower transformation frequencies than maximally-induced cultures), we find this to be because some of the cells are taking up multiple DNA fragments and the rest aren't taking up any at all.

This was surprising, as I'd expected that the low transformation frequencies of such 'partially-induced' cultures would be because the cells were all only a little bit competent. We see this max-or-nothing pattern not only in wild-type cells under poorly-inducing conditions, but also in low-competence mutants under fully inducing conditions and in hypercompetent mutants under what are otherwise non-inducing conditions. I've had this puzzling result hanging around in the back of my brain for about 15 years.
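The logic behind distinguishing 'some cells fully competent' from 'all cells a little competent' can be sketched with a bit of arithmetic. This assumes the assay scores transformation at two unlinked markers (an assumption here; the calculation isn't spelled out above). If only a fraction f of the cells are competent, and a competent cell is transformed at marker A with probability a and at marker B with probability b, then the single-transformant frequencies are f·a and f·b and the double-transformant frequency is f·a·b, so f can be recovered as (freq A × freq B) / freq AB. The function name and all numbers below are hypothetical.

```python
def fraction_competent(freq_a, freq_b, freq_ab):
    """Estimate the competent fraction from single- and double-transformant frequencies.

    Assumes the two markers are unlinked and that every competent cell is
    equally competent (the 'max-or-nothing' model).
    """
    if freq_ab <= 0:
        raise ValueError("double-transformant frequency must be positive")
    return (freq_a * freq_b) / freq_ab


# Hypothetical culture: 10% of cells competent, with per-marker transformation
# probabilities of 2% and 3% in each competent cell.
f_true, p_a, p_b = 0.1, 0.02, 0.03
estimate = fraction_competent(
    freq_a=f_true * p_a,
    freq_b=f_true * p_b,
    freq_ab=f_true * p_a * p_b,
)
print(f"estimated fraction competent: {estimate:.2f}")
```

If instead every cell were weakly competent, double transformants would appear at simply the product of the two single frequencies and the estimate would come out near 1, which is what makes an excess of double transformants diagnostic.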

I've always thought that it reflected something about the action of adenylate cyclase, CRP or Sxy, the proteins whose actions control expression of all the genes in the competence (CRP-S) regulon. But yesterday we were talking about how the PurR repressor represses only one of the competence genes (rec-2), and I realized that, because the fraction-competent assays measure only transformation, the max-or-nothing could reflect the activity of a single gene instead of the whole regulon.

So maybe all the cells in our partially-induced cultures have turned on all the competence genes except rec-2, but only in some of them have levels of purines fallen low enough to inactivate the PurR repressor and turn on rec-2. This might be tested by repeating the FC assays on cells whose purR gene is knocked out. But first I need to check the notes from the grad student who created the purR knockout, to see if he already tested this.

Does the USS wrap around the pilus?

Something got me thinking that, rather than kinking during uptake, the USS might facilitate DNA uptake by wrapping itself around the 'type IV' pilus.

We know that these pilus filaments on the cell surface are required for DNA uptake in many bacteria including H. influenzae. Some of these bacteria make long pili, and others (including our H. influenzae strain) just make what are probably short stubs, too short to be seen by scanning electron microscopy. We also know that DNA can bind to the pili of Pseudomonas aeruginosa.

I have been discounting this idea because I remember reading an article that, in a species whose uptake had sequence specificity, substituting the normal pilin gene with one from a species with no uptake specificity didn't change the cell's uptake specificity. BUT, I can't remember the details, and I can't find the article, so maybe I was wrong. The bacteria were probably Neisseria gonorrhoeae or Neisseria meningitidis; it wasn't H. influenzae or one of its close relatives, and the Neisserias are the other group of bacteria with uptake specificity. I should probably email either the Neisseria people or the woman who did the pilin-binding work in P. aeruginosa to see if they can point me to the paper. In any case, the Neisseria USS is unrelated to the H. influenzae USS, so I should consider testing this for H. influenzae.

I was thinking that the DNA would need to wrap 'paranemically' around the pilus - this term means that the DNA and the pilus aren't topologically interlinked like links in a chain, but just snuggle up to each other like... like... (can't think of a good analogy). But if the pili are just short stubs this distinction doesn't matter.

How could we test this? The conceptually simplest way (i.e. the only idea I have right now) is to mix USS-containing and control DNAs (circular? linear?) with purified H. influenzae pili and look for evidence of conformation change (ligatability of the ends) or of DNA-protein interactions (protection from nucleases? interference by ethylation?).

Unfortunately there's no way to purify the hypothetical short stub pili that our strain probably makes, but I can think of several possible solutions. One is to overexpress the pilin gene (pilA) under conditions where pili can assemble. This might work in our H. influenzae strain, or we could overexpress the gene in a different species whose own pilin gene is knocked out. If the pilin subunits won't assemble into pili, we might be able to purify the pilin subunits from such an overproducing strain and make them reassemble in vitro. Maybe the best solution would be to use a different strain, as some H. influenzae strains are reported to produce long pili.

(Hooray! While searching for the paper about H. influenzae strains that produce type 4 pili, I found the paper that showed that replacing a Neisseria pilin with one from P. aeruginosa didn't change uptake specificity. I'll need to read it carefully - it's quite dense.) I found the H. influenzae pili paper, and the strain they describe is one that we have in the lab. The authors don't report purifying the pili, but maybe we wouldn't need to do this. The next step is to get in touch with them, in case they are working on pilin-DNA interactions themselves. We wouldn't want to compete with them, but maybe we can collaborate.

We know that these pilus filaments on the cell surface are required for DNA uptake in many bacteria including H. influenzae. Some of these bacteria make long pili, and others (including our H. influenzae strain) just make what are probably short stubs, too short to be seen by scanning electron microscopy. We also know that DNA can bind to the pili of Pseudomonas aeruginosa.

Fructose? Why not glucose?

One of the grad students has new data showing that the active form of the regulatory protein CRP is needed not only for expression of the CRP-S genes coregulated by the Sxy protein, but for expression of Sxy itself. This has reminded me of a puzzle in our understanding of how cyclic AMP (cAMP), the activator of CRP, depends on sugar uptake.

Many bacteria have evolved to use cAMP as a signal that supplies of energy and carbon are running low, and the concentration of cAMP in the cell is controlled mainly by a sugar-uptake pathway called the phosphotransferase system (PTS). This is a cluster of membrane-associated proteins that bind specific sugars in the cell's environment, bring them across the membrane, and stick a phosphate group onto them so they can't leak back out. Each kind of PTS sugar (glucose, fructose etc.) has one or two specialized proteins to take it up, in addition to the generalist proteins that bring in the phosphate and set the stage. (Some other sugars don't use the PTS at all - these are usually less common sugars.)

The PTS uses the availability of its sugars to control the synthesis of cAMP. If lots of sugar is being transported, no cAMP is made. But if sugar supply runs out, the PTS stimulates synthesis of cAMP. This in turn activates CRP to turn on genes for using other (non-PTS) sugars and for conserving other energy resources. Bacteria differ in the sugars their PTS systems can handle, depending on the environment they're adapted to. E. coli, for example, has PTS uptake proteins for many different sugars, because it lives in the gut.

When the H. influenzae genome sequence first became available, we checked it for genes encoding PTS transport proteins. We were a bit surprised to find only proteins for transporting one sugar, and more surprised that this sugar was fructose, not glucose. Because we had shown that H. influenzae uses its PTS to control cAMP levels and thus to control the activity of CRP, this meant that the availability of fructose was a major factor in the cell's decision to take up DNA.

This was surprising because I had assumed that glucose was the primary sugar in human bodily fluids, including respiratory mucus (H. influenzae's environment). I was told that in fact fructose might have evolutionary precedence - the ancestral PTS may have transported fructose. And I found out that there was significant fructose in at least some bodily fluids, though not much in our blood unless we'd been consuming sugar (sucrose is a glucose+fructose dimer). Nevertheless fructose seemed an odd choice for the sugar regulating CRP activity, and I kept wondering whether the absence of a glucose PTS uptake protein was a peculiarity of the lab strain of H. influenzae rather than a general property of the species.

That was 11 years ago, and now we have genome sequences of several H. influenzae strains and of 8 or 9 other Pasteurellacean species. So I did some searching for the glucose and fructose transporters, and found that I was wrong. None of the other H. influenzae strains have genes for glucose PTS proteins, and neither do about half of the other species in H. influenzae's family. Nor do they all have genes for fructose PTS proteins. The distribution of these genes doesn't perfectly match the phylogenetic relationships of the species, suggesting that genes may have been lost (or gained) several times. (Ravi Barabote and Milton Saier review the PTS genes in all bacteria: (2005) MMBR 69:608-634.)

So I'm still perplexed. CRP regulates a large number of genes in H. influenzae, and I would think there would be strong selection to optimize the regulatory machinery that decides when these genes should be turned on. But these bacteria seem to have been very cavalier (careless) in looking after the PTS genes that control this decision. This might mean that PTS regulation of cAMP isn't really such a big deal, or it might mean that the bacteria know things I don't about how glucose and fructose levels vary in their environments.

GeneSpring not needed?

Several years ago we did quite a lot of microarray work, looking at changes in gene expression as wildtype cells developed competence and in response to various mutations and culture conditions. The analysis was greatly helped by use of GeneSpring software, which displayed the results in various intuitive and insight-increasing ways.

But the GeneSpring company charged us about $3500 ($US) per year to run their software on a single computer, so when the work was done we let the subscription lapse. Lately I've been wanting to look at some of the old microarray data, but dreading the hassle involved in getting GeneSpring set up again. We wouldn't need to pay for a subscription, as another lab in the BARN research group has it and would let us use it. But my past experience setting up our data on GeneSpring means that I expect hours and hours of fussing and fiddling with incompatibilities and upgrades and emailing tech support and the people in London who made the arrays, etc.

But this afternoon I realized that I don't need GeneSpring to look at the data. We have simple text files of the array data that I can open in Excel. I won't see pretty visualizations of genes and sequences and colour-coded expression ratios, but I can look up the numbers for the signal intensities of different genes under different conditions.

If I needed to look at a lot of genes, or under a lot of conditions, setting up GeneSpring would be worth the trouble. But I think I only need to check a few critical genes under a couple of conditions. The only problem will be finding the files I need, and I'm pretty sure I know where they are.
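For a lookup this small, a few lines of Python would also do the job instead of Excel. This is only a sketch under assumptions: it supposes the array files are tab-delimited with a header row, a gene-name column called `gene`, and one signal-intensity column per condition. The column names, gene names and numbers below are made-up placeholders, not our real array data.

```python
import csv
import io


def lookup_signals(handle, genes, conditions, gene_column="gene"):
    """Return {gene: {condition: signal}} for the requested genes and conditions.

    Assumes a tab-delimited table with a header row (hypothetical layout).
    """
    wanted = set(genes)
    reader = csv.DictReader(handle, delimiter="\t")
    results = {}
    for row in reader:
        name = row[gene_column]
        if name in wanted:
            # Convert only the columns we were asked about.
            results[name] = {cond: float(row[cond]) for cond in conditions}
    return results


# Placeholder table standing in for one of the text files (values are invented).
toy_file = io.StringIO(
    "gene\tcond_A\tcond_B\tcond_C\n"
    "geneX\t100\t250\t90\n"
    "geneY\t40\t35\t400\n"
    "geneZ\t10\t12\t11\n"
)

signals = lookup_signals(
    toy_file, genes=["geneX", "geneZ"], conditions=["cond_A", "cond_B"]
)
for gene, values in signals.items():
    print(gene, values)
```

With a real file, the `io.StringIO` stand-in would be replaced by something like `open("array_data.txt", newline="")` (a hypothetical filename), and the column names adjusted to whatever the files actually use.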

Transformasomes?

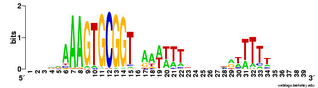

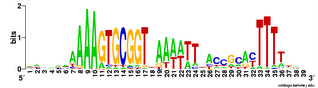

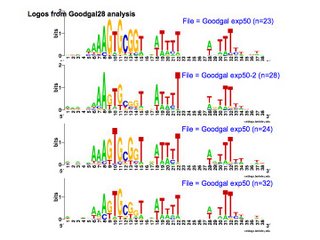

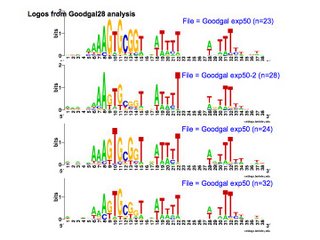

At one time DNA uptake was thought to be mediated by membrane-bound vesicles called transformasomes (Goodgal and Smith lab papers). These structures appear as surface 'blebs' in electron micrographs of competent H. influenzae and H. parainfluenzae and to a lesser extent on non-competent cells. Blebs often contain DNA which is inaccessible both to externally added nucleases and to the restriction enzymes present in the cell's cytoplasm. They appear to be internalized on DNA uptake (only in H. para?), and are shed into the medium by some competence mutants and when normal cells lose competence. Purified blebs bind DNA tightly and with the same specificity as intact cells but do not internalize it (???).