I've been wondering how interdependent the different parts of the uptake machinery are.

We have long known that some H. influenzae mutants (knockouts of rec2 or comF) could take up DNA but not translocate it into the cytoplasm. They take up about the same amounts of DNA as do wildtype cells, but the DNA stays intact (double-stranded, not degraded). Because this DNA doesn't get cut by the nucleases we know to be active in the cytoplasm, the DNA must be accumulating in the periplasm. This means that the uptake machinery can continue to operate in the absence of translocation.

What about ComE1? This H. influenzae protein is a homolog of the well-characterized B. subtilis protein ComEA. In B. subtilis, ComEA is thought to sit on the periplasmic side of the membrane, under the thick cell wall (there is no outer membrane). The pseudopilus machinery (specified by the ComG proteins) passes DNA across the cell wall to ComEA, and ComEA passes the DNA to the membrane transport machinery (homologs of Rec2 and ComF).

ComEA is essential for DNA uptake in B. subtilis, and its homologs are essential in other competent bacteria. But the H. influenzae homolog, ComE1, is not essential - knockouts reduce transformation by only about 10-fold, and reduce uptake by only about 7-fold. Nevertheless, let's assume that ComE1 does more or less the same thing that ComEA does - it accepts DNA from the pseudopilus and passes the DNA on to the machinery that moves it across the inner membrane.

Here's the question: Can the pseudopilus keep doing its job (reeling DNA in) even if ComE1 isn't passing the DNA on? Put another way, do mutations in comE1 reduce DNA uptake, or are they like mutations in rec2 and comF? I already told you the answer - a comE1 knockout reduces DNA uptake as well as transformation. We've known this for several years, but I only now put it into the context of the newer B. subtilis results.

So this means that my model of DNA uptake has to include ComE1 accepting the DNA from the pseudopilus and passing it on to Rec2 and ComF if they're available, or letting the DNA pile up in the periplasm if they're not. If ComE1 isn't there, the pseudopilus stalls. The residual transformation we see in cells lacking ComE1 probably means that about 10% of the DNA finds its way from the pseudopilus to the translocation machinery even in the absence of ComE1, and that this machinery can transport DNA that hasn't been handled by ComE1.
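To make the logic of this model explicit, here is a minimal sketch in Python. It is only a bookkeeping device, not a quantitative model: the names `Genotype` and `predict` are made up for illustration, the fold-reductions are the approximate ones quoted above for the comE1 knockout, and setting transformation to zero when Rec2 or ComF is missing is a simplifying assumption.

```python
from dataclasses import dataclass

# Approximate fold-reductions quoted in this post for a comE1 knockout.
COME1_UPTAKE_FOLD = 7.0
COME1_TRANSFORMATION_FOLD = 10.0


@dataclass(frozen=True)
class Genotype:
    """Hypothetical genotype description, for illustration only."""
    comE1: bool = True          # ComE1 present
    translocation: bool = True  # Rec2 and ComF present


def predict(g: Genotype) -> dict:
    """Qualitative predictions of the model, relative to wildtype (= 1.0)."""
    # Without ComE1 the pseudopilus mostly stalls, so uptake drops.
    uptake = 1.0 if g.comE1 else 1.0 / COME1_UPTAKE_FOLD

    if not g.translocation:
        # DNA is taken up but cannot cross the inner membrane, so it
        # accumulates intact in the periplasm.
        # Assumption: no transformation at all in this case.
        return {"uptake": uptake, "transformation": 0.0, "dna_fate": "periplasm"}

    # With the translocation machinery present, DNA reaches the cytoplasm;
    # without ComE1 only about a tenth as much transformation is seen.
    transformation = 1.0 if g.comE1 else 1.0 / COME1_TRANSFORMATION_FOLD
    return {"uptake": uptake, "transformation": transformation, "dna_fate": "cytoplasm"}


if __name__ == "__main__":
    for label, g in [
        ("wildtype", Genotype()),
        ("comE1 knockout", Genotype(comE1=False)),
        ("rec2 or comF knockout", Genotype(translocation=False)),
    ]:
        print(label, predict(g))
```

The point of writing it this way is that uptake depends only on ComE1, while the fate of the DNA depends only on Rec2 and ComF, which is exactly the independence the model claims.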

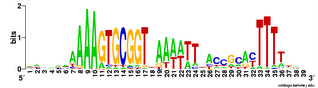

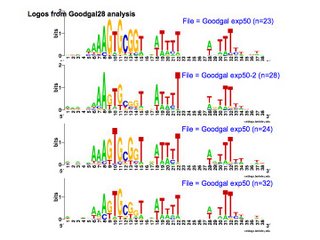

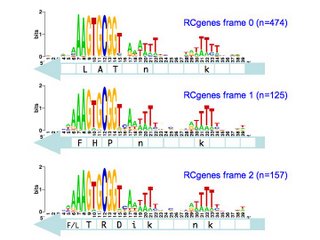

This interpretation makes a prediction about an experiment we've already done - that the reduced DNA uptake seen in comE1 mutants is still USS-dependent. And that's what we see. There's another prediction: comE1 mutants should not be defective in the initiation of uptake (passing the initial loop through the pore) but only in the subsequent reeling in of the DNA by the pseudopilus. Perhaps we can test this using laser tweezers, or by cross-linking analysis. From this perspective the comE1 mutant may be very useful in helping us dissect the steps of uptake, as it would cause uptake to stall or slow.

This is all very satisfying. We've had the comE1 data for a long time, and tried to write it up, but haven't published it because it didn't seem to explain anything. I think our confusion arose from trying to distinguish between 'binding' and 'uptake' of DNA, whereas now I'm distinguishing between the 'initiation' and 'continuation' of uptake. Now that we have a framework for these results, they will make a nice little paper. Unfortunately the technician and M.Sc. student who did the work are long gone, so they can't help rewrite the draft, and some of their experiments should be repeated and expanded a bit. Luckily one of the present post-docs is doing similar experiments on other strains, and it would probably be quite simple for her to do the needed experiments and help finish the paper (on which she would then be first author).
