Not your typical science blog, but an 'open science' research blog. Watch me fumbling my way towards understanding how and why bacteria take up DNA, and getting distracted by other cool questions.

Two steps forward, one step back?

Advance #1: Realizing that I can use a log scale for the x-axis to see if runs have really reached equilibrium.

Retreat (the opposite of advance?) #1: Realizing that runs I had thought were at equilibrium aren't, so that conclusions I had thought were solid are not, so I don't really know what the true results are!

Advance #2: Realizing that I should think of/write about the elevated fragment mutation rates as degrees of divergence.

Advance #3: Remembered that the Introduction says that the goal of the work is to evaluate the drive hypothesis as a suitable null hypothesis for explaining uptake sequence evolution. Our results show that it is; the accumulation of uptake sequences under our model is strong, robust, and has properties resembling those of real uptake sequences.

Progress: Going through the Results section, annotating each paragraph with a summary of all of the relevant data and noting whether each piece is solid equilibrium data (fit to use) or not. This is taking a lot of time, because I have to identify and check out (and sometimes enter and graph) the data, but the results aren't as bad as I had feared. Runs that have already finished fill some of the new-found gaps, and others are already running but won't finish for a few days (some longer). So I'm going to finish this annotation, queue whatever runs I think are still needed, and then maybe spend a few days on the optical tweezers prep work (at long last) while the runs finish.

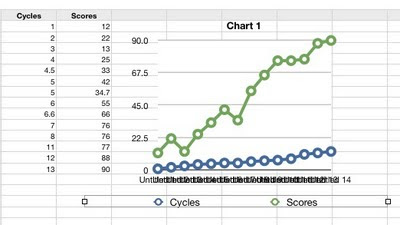

Two views of the same data

Here is some data from a series of runs where I simultaneously varied the lengths and numbers of the recombining fragments, so that the total amount of recombination remained the same (e.g. 1000 fragments 100 bp long or 50 fragments 2 kb long). I concluded that the runs were close to equilibrium and that the runs that got their recombination with the shortest fragments reached the highest equilibrium score.

But wait! This is the same data, now with the X-axis on a log scale. Now I see something quite different - after the first few cycles, the scores of all the runs are going up at the same rate (same slope), and their rate of increase is very log-linear. None of the runs show any sign of approaching equilibrium (i.e. of leveling off).

I had said I would always do runs both from above (a seeded genome) and from below (a random-sequence genome) and take as equilibrium the score the up and down runs converged on. I didn't do that here, but I see I should have.

I don't know whether I've done any runs that went long enough that the score obviously leveled off when plotted on a log scale. If not I should.
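One way to make the log-scale check less dependent on eyeballing a graph is to fit a straight line to score against log10(cycle) over the later part of a run. This is only a minimal sketch: the function name, the `tail_fraction` parameter and the example numbers are all made up for illustration and are not part of the simulation model. A slope near zero suggests the run has leveled off; a clearly positive slope means it is still climbing log-linearly.

```python
import math

def late_log_slope(cycles, scores, tail_fraction=0.5):
    """Least-squares slope of score vs log10(cycle) over the last part of a run."""
    points = [(math.log10(c), s) for c, s in zip(cycles, scores) if c > 0]
    start = int(len(points) * (1 - tail_fraction))
    tail = points[start:]
    if len(tail) < 2:
        raise ValueError("need at least two points in the tail")
    mean_x = sum(x for x, _ in tail) / len(tail)
    mean_y = sum(y for _, y in tail) / len(tail)
    sxx = sum((x - mean_x) ** 2 for x, _ in tail)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in tail)
    return sxy / sxx

# Hypothetical data: one run still rising, one that has leveled off.
cycles = [10, 100, 1000, 10000, 100000]
still_rising = [1.0, 2.0, 3.0, 4.0, 5.0]
leveled_off = [1.0, 2.5, 3.0, 3.0, 3.0]

print(late_log_slope(cycles, still_rising))
print(late_log_slope(cycles, leveled_off))
```

The threshold for "close enough to zero" would have to be chosen by looking at real runs, so none is built in here.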

A clearer way to look at the mutation rates?

In the model itself the differences are handled as different mutation-rate settings that are applied to the same mutation-generating subroutine, and that's how I've been thinking of them. But I now think that referring to both types of changes (in the genome and in the fragment) as due to different mutation rates has created problems, causing me to feel that I have to justify using two different rates, and that the model would somehow be simpler or purer if the two rates were the same.

Effects of mutations

I suspect that the interactions between mutation and uptake sequence accumulation are more subtle than their independence would seem to suggest. So here are several questions that I think I can quickly answer with more runs:

1. Does changing the proportionality of µg and µf change the equilibrium? (I already know there's about a 2-fold equilibrium score difference between 100:1 and 100:100, but I want to test more proportions.)

2. Does changing fragment length but keeping the number of fragments recombined per cycle the same change the equilibrium?

3. Does changing the fragment length but keeping the amount of DNA recombined the same change the equilibrium?

4. When µf = µg, does changing the fragment length but keeping the number of fragments recombined per cycle the same have no effect? Maybe not, because long fragments are more likely to change other US?
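Questions 2 and 3 differ only in what is held constant while the fragment length changes. A small sketch of how the two sets of run settings could be generated, assuming settings are just (fragment length, number of fragments) pairs; the function names are hypothetical and the example numbers are borrowed from the 1000 x 100 bp and 50 x 2 kb runs described earlier:

```python
def constant_count(lengths_bp, n_fragments):
    """Question 2: vary fragment length, keep the number of fragments per cycle fixed."""
    return [(length, n_fragments) for length in lengths_bp]

def constant_total(lengths_bp, total_bp):
    """Question 3: vary fragment length, keep the total DNA recombined per cycle fixed."""
    return [(length, total_bp // length) for length in lengths_bp]

lengths = [100, 500, 2000]
print(constant_count(lengths, 1000))
print(constant_total(lengths, 100_000))
```

In the second set the total recombined per cycle stays at 100 kb, so any difference in equilibrium score would be attributable to fragment length rather than to the amount of recombination.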

Line breaks in Word 2008

Anyway, my clumsy solution was to chop the 200 kb input sequence into ten 20 kb segments, and evolve them all in parallel. Because Word is good with word counts, I opened the sequence file (as a text file) in Word and marked off every 20 kb with a couple of line breaks. Then I opened the file in TextEdit and deleted everything except the last 20 kb to get a test file (no line breaks at all, that I could see). But it generated an 'unrecognized base' error message when I tried to use it, so my first suspicion was that Word had somehow generated a non-Unix line break.

Sure enough, opening the file in Komodo showed that it had. But surprisingly, the problem wasn't a Mac-style line break, but a DOS/Windows line break! Maybe Word 2008 thinks all .txt files are for Windows?
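For what it's worth, this kind of problem can be diagnosed without opening an editor. The sketch below counts the three common line-break styles in a file's raw bytes and converts everything to Unix style. The function names and the sample bytes are invented for illustration.

```python
def describe_line_endings(data: bytes) -> dict:
    """Count DOS/Windows (CRLF), old Mac (CR) and Unix (LF) line breaks."""
    crlf = data.count(b"\r\n")
    cr = data.count(b"\r") - crlf   # bare CR only
    lf = data.count(b"\n") - crlf   # bare LF only
    return {"dos_crlf": crlf, "old_mac_cr": cr, "unix_lf": lf}

def to_unix(data: bytes) -> bytes:
    """Convert CRLF and bare CR line breaks to LF."""
    return data.replace(b"\r\n", b"\n").replace(b"\r", b"\n")

sample = b"ACGT\r\nTTGA\r\n"   # made-up sequence with DOS line breaks
print(describe_line_endings(sample))
print(to_unix(sample))
```

Reading the file in binary mode (`open(path, "rb")`) matters here, because text mode may silently translate the line breaks before they can be counted.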

My coauthors cut the Gibbs analysis!

Time to think about doing an experiment!

One is the US variation manuscript. It isn't exactly on my plate yet, as my coauthors are still assembling their drastic revisions back into a coherent manuscript, but they say I should get it tomorrow. I'm hoping it's now in pretty good shape, with my job now just to polish the writing and the figures so we can get it submitted within a few weeks.

The other is beginning the groundwork for the optical tweezers experiments. Let's see what I can remember about what needs to be done. In general, I'm going to use Bacillus subtilis cells as my positive control, because they're bigger and tougher than H. influenzae. They're also naturally competent, and were successfully used for an optical tweezers study of DNA uptake in 2004.

1. I need to be able to stick cells onto glass coverslips without killing them or otherwise preventing them from taking up DNA. I'll start with Bacillus subtilis, and with poly-L-lysine coated cover slips. I'll need to make these myself - I have several protocols and several offers of help, but don't know if any of these people will be around over the holidays. The alternative to poly-L-lysine is a silane solution (fancy name) that was used for the B. subtilis experiments. But I don't have a protocol for using this, so it's a bit of a crapshoot. Some of the poly-L-lysine protocols say to pre-clean the coverslips with strong acid (nitric? chromic?) - a researcher down the hall said he might have some (old-tyme labs are good to have around).

2. I need to attach streptavidin to polystyrene beads. I have a protocol, and the streptavidin, and the coupling reagent, and the ready-for-coupling beads (they may be a bit small, 1 µ rather than 2 µ, but they'll do). What I don't have is a good way to test how well the coupling has worked (see below).

3. I need some biotin-conjugated DNA (H. influenzae chromosomal DNA). The research associate made some a while back for a different experiment, but I don't know if there's any left, or where it would be. I could make my own, if I can find the biotin.

4. I need to make the B. subtilis competent. This means that I need to make up the appropriate culture medium and competence-inducing medium (2 kinds, as I recall), and the appropriate agar plates for selecting transformants (so I can test whether they really are competent).

5. Once I have the streptavidin-coated beads and the biotin-coupled DNA, and some competent cells, I can test whether cells will stick to beads that have been incubated with DNA but not to beads without DNA or to DNase-treated beads. If this works I will know that there's streptavidin on the beads and biotin on the DNA and the cells are competent. If it doesn't I'll only know that at least one thing isn't right.

6. At this stage I can also test whether the cells I've stuck onto a coverslip can still bind DNA, by giving them the beads-plus-DNA and seeing if the beads stick to the cells (with the same controls as in step 5). Oh, but first I have to make sure that the competent cells will also stick to the coverslips.

7. Then I can make some competent H. influenzae and try steps 5 and 6 with them (assuming I've been able to stick the H. influenzae cells onto coverslips).

8. After all this is working, I'll be ready to go back to the physics lab and try to measure some forces!

A draft NIH proposal

Manuscript progress

We have a plan (again, but it's a new plan)

Aim 1. Characterizing the genetic consequences of transformation:

A. How do transformant genomes differ from the recipient genome? We want to know the number and length distributions of recombination tracts. This will be answered by sequencing a number of recombinant genomes (20? more?), preferably using multiplexing. We have preliminary data (analysis of four) showing 3% recombination.

B. How much do recombination frequencies vary across the genome? This will be measured by sequencing a large pool of recombinant genomes. The sensitivity of this analysis will be compromised by various factors - some we can control, some we can't.

C. Are these properties consistent across different strains? We should do 2 or more transformants of 86-028NP and of a couple of other transformable strains.

D. How mutagenic is transformation for recombined sequences? For non-recombined sequences? Is mutagenicity eliminated in a mismatch repair mutant? If not, is it due to events during uptake or translocation?

Aim 2. Characterizing the genetic differences that cause strain-to-strain variation in transformability: (The results of Part A will guide design of these experiments.)

A. What loci cause strain 86-028NP to be ~1000-fold less transformable than strain Rd? (Are any of these loci not in the CRP-S regulon?) We will identify these by sequencing Rd recombinants (individually and/or in one or more pools) pre-selected for reduced transformability.

B. What is the effect of each 86-028NP allele on transformability of Rd, and of the corresponding Rd allele on 86-028NP? Are the effects additive? Do some affect uptake and others affect recombination?

C. Are transformation differences in other strains due to the same or different loci? This can be a repeat of the analysis done in Aim 2A. Does each strain have a single primary defect?

D. How have these alleles evolved? Have they been transferred from other strains? Do defective alleles have multiple mutations, suggesting they are old?

In the Approach section we'll explain how we will accomplish these aims, and why we have chosen these methods. In the Significance and Innovation sections we'll need to convince the reader that these aims will make a big difference to our understanding of bacterial variation and evolution.

Bacillus subtilis

My turn to do lab meeting, yet again!

a) millions of chromosomal DNA fragments and degenerate uptake sequences taken up by competent cells,

b) chromosomal DNA fragments taken into the cytoplasm of competent cells,

c) chromosomal DNA recombined by a non-RecA recombinase,

d) DNA of millions of transformants of a strain unable to do mismatch repair.

Aim 3. Map loci responsible for transformation differences between two strains.

Keyboard rehab

I wondered if the death of my Apple aluminum keyboard might be not a direct consequence of the tea I spilled in it, but rather due to starchy goo created when the tea contacted the millions of nanoparticle-sized cracker crumbs that had probably slipped through the narrow gaps surrounding the keys over the last two years.

So I again took the batteries out, and washed it for a long time under warm running water, massaging the keys to loosen any crud stuck under them. Then I dried it overnight in the 37°C incubator (the one with a fan that circulates warm dry air).

And voila - it works fine again!

Grant writing

Bacteria moving on surfaces

An aside: For similar reasons we usually use broth cultures in exponential growth, because we expect the physiology of such cells to be independent of the culture density. Once the density gets high enough to affect cell growth, the culture will no longer be growing exponentially, and minor differences in cell density can cause big differences in cell physiology. Unfortunately many microbiologists are very cavalier in their interpretation of 'exponential', and consider any culture whose growth hasn't obviously slowed as still being in log phase.

The usual lab alternative is to grow them on the surfaces of medium solidified with agar. This is quite convenient, as most kinds of bacteria can't move across the usual 1.5% agar, so isolated cells grow into colonies. The density of cells on an agar surface can get very high (a stiff paste of bacteria), because the cells are being fed from below by nutrients diffusing up through the agar.

Depending on the agar concentration, the film of liquid on the surface of the agar may be thick enough (?) to allow bacteria that have flagella to swim along the surface. Because the bacteria often move side-by-side in large groups this behaviour is called 'swarming'. Often swarming on agar surfaces is facilitated by surfactants that the bacteria produce, which reduce the surface tension of the aqueous layer. I've always assumed that bacteria living in soil and on other surfaces produce such surfactants as a way of getting surface-adsorbed nutrients into solution (that's how the surfactants we use in soaps and detergents do their job), but maybe surfactants are also advantageous for moving across surfaces with air-water interfaces, such as damp soil. The side-by-side cell orientation and movement may also be a consequence of surface-tension effects, as illustrated in this sketch.

One commonly observed effect of high density growth on agar is less sensitivity to antibiotics. We and many others have noticed that we need higher antibiotic concentrations on agar plates than in broth cultures (or vice versa, that antibiotic-resistant bacteria die if we grow them in broth at the same antibiotic concentration we used on agar plates). We also directly see density effects in our transformation assays - if we put a high density of antibiotic sensitive cells on a plate, we often see more 'background' growth of the sensitive cells. (Sometimes we see the opposite - resistant cells can't form colonies when they're surrounded by too many dying sensitive cells.)

But why would more dense bacteria be more resistant to an antibiotic? One possibility is that the individual cells aren't more resistant, but because more cells are present, more cells get lucky. If this were true we'd expect the number of cells in the 'background' to be directly proportional to the number of cells plated. A more common interpretation is that the presence of other cells somehow protects cells from the antibiotic. We know that resistant cells can protect sensitive cells from some antibiotics, if the mode of resistance is inactivation of the antibiotic. This is especially powerful if the resistant bacteria secrete an enzyme that inactivates the antibiotic, as is the case with ampicillin. This effect occurs both in broth and on agar plates.

But can sensitive cells protect other sensitive cells? Might dying cells somehow sop up antibiotic, reducing the concentration their neighbours are exposed to? Might an underlying layer of sensitive cells protect the cells above them from antibiotic?

The big problem I see is that bacteria are so small that concentrations will very rapidly equilibrate across them by diffusion. The agar in the plate is about 500 µ thick, and the cells are only about 1 µ thick, so there should be far more antibiotic molecules in the medium than the sensitive cells can bind**. Thus I don't see how a layer of sensitive bacteria could use up enough of the antibiotic to significantly reduce the effective concentration for the cells above. Even if the cell membrane is a barrier to diffusion of the antibiotic, there's going to be enough fluid around the cells for the antibiotic to diffuse through.

** But I haven't done the math. OK, back of the envelope calculation puts the number of molecules of antibiotic at about 10^15/ml (assume a m.w. of 5 x 10^2 and a concentration of 5 µg/ml). The density of cells on top of the agar might be 10^12/ml. If each cell were to bind 1000 molecules of antibiotic (that seems a lot, but maybe it's not), they would together bind up all the antibiotic from an agar layer equivalent to the thickness of the cell layer. But the thickness of even a very thick layer of cells is no more than a few % of the thickness of the agar, so the overall antibiotic concentration would only decrease by a few %.
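The back-of-the-envelope numbers can be written out explicitly. This is a sketch only: the function names are invented, and the 3% used for the ratio of cell-layer thickness to agar thickness is an assumed stand-in for "a few %". Done this way the antibiotic comes out at about 6 x 10^15 molecules/ml, the same order of magnitude as the rough estimate above, and the overall depletion at about 0.5%, so the conclusion holds, if anything more strongly.

```python
AVOGADRO = 6.022e23  # molecules per mole

def molecules_per_ml(conc_ug_per_ml, mol_weight):
    """Antibiotic molecules per ml for a concentration given in µg/ml."""
    return conc_ug_per_ml * 1e-6 / mol_weight * AVOGADRO

def overall_depletion(cells_per_ml, bound_per_cell, antibiotic_per_ml, layer_to_agar_ratio):
    """Fraction of all the antibiotic in the plate that the cell layer could bind."""
    bound_fraction = cells_per_ml * bound_per_cell / antibiotic_per_ml
    return min(1.0, bound_fraction * layer_to_agar_ratio)

antibiotic = molecules_per_ml(5, 500)                        # 5 µg/ml, m.w. 500 (from the text)
depletion = overall_depletion(1e12, 1000, antibiotic, 0.03)  # 0.03 is an assumed "few %"
print(f"{antibiotic:.1e} molecules/ml, overall depletion {depletion:.2%}")
```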

eyboard kaput

uckily ondon rugs has new ones on sale for 69, so 'll try to get one tonight.

Choosing a topic for an NIH proposal

"Your ultimate task is to judge the likelihood that the proposed research will have an impact on advancing our understanding of the nature and behavior of living systems and the application of that knowledge to extend healthy life and reduce the burdens of illness and disability. Thus, the first assessment should be “Is it worthwhile to carry out the proposed study?”"

This is excellent advice, and I'm going through the draft we have now, identifying places where we can point to impacts in various fields (pathogenesis, recombination, evolution).

But I have a harder time relating to another part of the advice, on how to choose a topic to propose research on. It appears to be directed at people who know they want to get a research grant but don't really care what topic they'll be researching. "I know how to do A, B and C, so I'll propose to find out D."

PHS 398 gestalt

- Use the 'Significance' section to build the reader's interest in the problem(s) our Specific Aims address.

- Use the 'Innovation' section to build the reader's interest in the methods we will use to achieve our aims.

- Use the 'Approach' section to convince the reader that we can accomplish our Aims.

- Use the initial 'Specific Aims' page to summarize all of the above, both as an introduction for the few readers who will read the rest of the proposal and as a stand-alone summary for everyone else.

A paradigm shift for how I organize grant proposals?

Specific Aims

This comes first; it has a one-page limit. I think it must also serve as a Summary page, because I can't find any mention of a separate Summary in the Instructions.

- State concisely the goals of the proposed research and summarize the expected outcome(s), including the impact that the results of the proposed research will exert on the research field(s) involved.

- List succinctly the specific objectives of the research proposed, e.g., to test a stated hypothesis, create a novel design, solve a specific problem, challenge an existing paradigm or clinical practice, address a critical barrier to progress in the field, or develop new technology.

(a) Significance

- Explain the importance of the problem or critical barrier to progress in the field that the proposed project addresses.

- Explain how the proposed project will improve scientific knowledge, technical capability, and/or clinical practice in one or more broad fields.

- Describe how the concepts, methods, technologies, treatments, services, or preventative interventions that drive this field will be changed if the proposed aims are achieved.

(b) Innovation

- Explain how the application challenges and seeks to shift current research or clinical practice paradigms.

- Describe any novel theoretical concepts, approaches or methodologies, instrumentation or intervention(s) to be developed or used, and any advantage over existing methodologies, instrumentation or intervention(s).

- Explain any refinements, improvements, or new applications of theoretical concepts, approaches or methodologies, instrumentation or interventions.

(c) Approach

- Describe the overall strategy, methodology, and analyses to be used to accomplish the specific aims of the project. Include how the data will be collected, analyzed, and interpreted as well as any resource sharing plans as appropriate.

- Discuss potential problems, alternative strategies, and benchmarks for success anticipated to achieve the aims.

- If the project is in the early stages of development, describe any strategy to establish feasibility, and address the management of any high risk aspects of the proposed work.

- Discuss the PD/PI's preliminary studies, data, and/or experience pertinent to this application.

I promised myself that I'd have a rough draft by the end of November, so I'd better get busy converting my outline into paragraphs.

Improved trait mapping?

First a paragraph of background. Different strains of H. influenzae differ dramatically in how well they can take up DNA and recombine it into their chromosome (their 'transformability'). Transformation frequencies range from 10^-2 to less than 10^-8. We think that finding out why will help us understand the role of DNA uptake and transformation in H. influenzae biology, and how natural selection acts on these phenotypes. Many other kinds of bacteria show similar strain-to-strain variation in transformability, so this understanding will probably apply to all transformation. The first step is identifying the genetic differences responsible for the poor transformability, but that's not so easy to do, especially if there's more than one difference in any one strain.

Step 1: The first step we planned is to incubate competent cells of the highly transformable lab strain with DNA from the other strain we're using, which transforms 1000-10000 times more poorly. We can either just pool all the cells from the experiment, or first enrich the pool for competent cells by selecting those that have acquired an antibiotic resistance allele from that DNA. We expect the poor-transformability allele or alleles from the donor cells (call them tfo- alleles) to be present in a small fraction (maybe 2%?) of the cells in this pool.

Step 2: The original plan was to then make the pooled cells competent again, and transform them with a purified DNA fragment carrying a second antibiotic resistance allele. The cells that had acquired tfo- alleles would be underrepresented among (or even absent from) the new transformants, and, when we did mega-sequencing of the DNA from these pooled second transformants, the responsible alleles would be similarly underrepresented or absent.

The problem with this plan is that it's not very sensitive. Unless we're quite lucky, detecting that specific alleles (or short segments centered on these alleles) are significantly underrepresented in the sequence will probably be quite difficult. The analysis would be much stronger if we could enrich for the alleles we want to identify, rather than depleting them. The two alternatives described below would do this.

Step 2*: First, instead of selecting in Step 2 for cells that can transform well, we might be able to screen individual colonies from Step 1 and pool those that transform badly. We have a way to do this - a single colony is sucked up into a pipette tip, briefly resuspended in medium containing antibiotic-resistant DNA, and then put on an antibiotic agar plate. Lab-strain colonies that transform normally usually give a small number of colonies, and those that transform poorly don't give any. Pooling all the colonies that give no transformants (or all the colonies that fall below some other cutoff) should dramatically enrich for the tfo- alleles, and greatly increase the sensitivity of the sequencing analysis. Instead of looking for alleles whose recombination frequency is lower than expected, we'll be looking for spikes, and we can increase the height of the spikes by increasing the stringency of our cutoff.

The difficulty with this approach will be getting a high enough stringency for the cutoff. We don't want to do the work of carefully identifying the tfo- cells, we just want to enrich for them. In principle the numbers of colonies can be optimized by varying the DNA concentration and the number of cells plated, but these tests can be fussy because the transformation frequencies of colonies on plates are hard to control.
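To get a feel for how much the stringency matters, here is a rough sketch of the enrichment expected from pooling colonies that give zero transformants. It rests on assumptions that are not in the plan itself: that the number of transformants from a normally transforming colony is Poisson-distributed with some mean, and that tfo- colonies always give zero. The 2% starting fraction is the guess from Step 1; the function name is hypothetical.

```python
import math

def tfo_minus_fraction_in_pool(prior_fraction, mean_transformants):
    """Fraction of tfo- colonies among those that gave no transformants.

    Assumes normal colonies give a Poisson number of transformants
    (so P(zero) = exp(-mean)) and tfo- colonies always give zero.
    """
    p_zero_normal = math.exp(-mean_transformants)
    kept_tfo_minus = prior_fraction
    kept_normal = (1 - prior_fraction) * p_zero_normal
    return kept_tfo_minus / (kept_tfo_minus + kept_normal)

for mean in (0, 1, 3, 5):
    print(mean, round(tfo_minus_fraction_in_pool(0.02, mean), 3))
```

Under these assumptions the enrichment depends steeply on the mean number of transformants a normal colony gives, which is exactly the quantity that is hard to control on plates.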

Step 1* (the RA's suggestion): Instead of transforming the lab strain with the poorly-transforming strain in Step 1, we could do the reverse, using DNA from the lab strain and competent cells from the poorly transformable strain. Step 2 would be unchanged; we would make the pooled transformants competent and transform them with a second antibiotic-resistance marker, selecting directly for cells that have acquired this marker. This would give us a pool of cells that have acquired the alleles that make the lab strain much more transformable, and again we would identify these as spikes in the recombination frequency.

The biggest problem with this approach is that we would need to transform the poorly transformable strain. We know we can do this (it's not non-transformable), but we'd need to think carefully about the efficiency of the transformation and the confounding effect of false positives. If we include the initial selection described in Step 1, we could probably get a high enough frequency of tfo+ cells in the pool we use for step 2.

The other problem with this approach is that we'd need to first make the inverse recombination map (the 'inverse recombinome'?) for transformation of lab-strain DNA into the tfo- strain. This would take lots of sequencing, so it might be something we'd plan to defer until sequencing gets even cheaper.

I think we may want to present all of these approaches as alternatives, because we're proposing proof-of-concept work rather than the final answer. The first two are simpler and will work even on (best on?) strains that do not transform at all. The last will work very well on strains that do transform at a low frequency.

Still not done

One of the runs I thought had hung has finished, and it gives me enough data to fix the graph I needed it for. But I'm not going to do any more work on this until my co-authors have had a chance to consider whether we want to include this figure (I like it).

Getting the US variation manuscript off my hands

Below I'm going to try to summarize the new data (new simulation runs) I've generated. Right now I can't even remember what the runs were for, and I haven't properly analyzed any of them.

A. One pair of runs used 10 kb genomes; they were intended to split the load of a 20 kb genome run that had stalled (needed only as one datapoint on a graph). That run had used a very low mutation rate and I was trying to run it for a million cycles, but it had stalled after 1.87 x 10^5 cycles. Well, it kept running, but it stopped posting data, so eventually I aborted it. Splitting it into two 10 kb runs didn't help - both hung after 1.87 x 10^5 cycles. Now I've made two changes. First, I've modified the 'PRINT' commands so that updates to the output file won't be stuck in the cluster's buffer; this may be why updates to the output files were so infrequent (sometimes not for weeks!). Second, I've set these runs to go for only 150,000 cycles and to report the genome sequences when they finish. This will let me use their output sequences as inputs for new runs.

B. Another pair of runs were duplicates of previous runs used to illustrate the equilibrium. One run started with a random-sequence genome and got better, the other started with a genome seeded with many perfect uptake sequences and got worse. They converge on the same final score, as seen in the figure below.

C. And one run was to correct a mistake I'd made in a 5000 cycle run that used the Neisseria meningitidis DUS matrix to specify its uptake bias. I should have set the mutation parameters and the random sequence it started with to have a base composition of 0.51 G+C, but absentmindedly used the H. influenzae value of 0.38. I needed the sequence that this run would produce, because I wanted to use the sequence outputs of it and its H. influenzae USS matrix equivalent as inputs for another 5000 cycles of evolution. I got the sequence from the first run, and started the second pair of runs, but unfortunately the computer cluster I'm using suffered a hiccup and those runs aborted. So I'll queue them again right now. (Pause while I re-queue them...)

D. Then there were four runs that used tiny fragments - enough 50, 25 and 10 bp fragments to cover 50% of the 200 kb genome. Because the length of the recombining fragments sets the minimum spacing of uptake sequences in equilibrium genomes, we expect runs using shorter fragments to give higher scores. But because the fragment mutation rate is 100-fold higher than the genomic rate in our simulations, most of the unselected mutations in our simulated genomes come in by recombination, in the sequences flanking uptake sequences. This means that genomes that recombine 10 bp fragments get few mutations outside of their uptake sequences, so I also ran the 10 bp simulation with a 10-fold higher mutation rate. These runs haven't finished yet - in fact, most of them have hardly begun after 24 hrs. I think I'd better set up new versions that use the bias-reduction feature, and then run the outputs of these in runs with unrelenting bias. (Pause again...)
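For a sense of scale, here is a rough sketch of how many fragments "enough to cover 50% of the 200 kb genome" means at each fragment length. It assumes coverage is simply (number of fragments x fragment length) / genome length, with no allowance for overlapping fragments; the function name is made up for illustration.

```python
def fragments_needed(genome_bp, fragment_bp, coverage=0.5):
    """Fragments per cycle needed to total `coverage` of the genome length."""
    return round(genome_bp * coverage / fragment_bp)


GENOME_BP = 200_000  # the 200 kb genome used in these runs

# Fragment lengths from the runs described above.
counts = {size: fragments_needed(GENOME_BP, size) for size in (50, 25, 10)}

for size, n in counts.items():
    print(f"{size} bp fragments: {n} per cycle")
```

Under this assumption the 10 bp run handles five times as many fragments per cycle as the 50 bp run.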

The rest of the new runs were to fill in an important gap in what we'd done. The last paragraph of the Introduction promised that we would find out what conditions were necessary for molecular drive to cause accumulation of uptake sequences. But we hadn't really done that - i.e. we hadn't made an effort to pin down conditions where uptake sequences don't accumulate. Instead we'd just illustrated all the conditions where they do.

E. So one series of runs tested the effects of using lower recombination cutoffs (used with the additive versions of the matrix) when the matrix was relatively weak. I had data showing that uptake sequences didn't accumulate if the cutoff was less than 0.5, but only for the strong version of the matrix. Now I know that the same is true for the weak version.

F. Another series tested very small amounts of recombination. The lowest recombination I'd tested in the runs I had already done was 0.5% of the genome recombined each cycle, which seemed like a sensible limit as this is only one 100 bp fragment in a 20 kb genome. But this still gave substantial accumulation of uptake sequences, so now I've tested one 100 bp fragment in 50 kb, 100 kb and 200 kb genomes. I was initially surprised that the scores weren't lower, but then remembered that these scores were for the whole genome, and needed to be corrected for the longer lengths. And now I've also remembered that these analyses need to be started with seeded sequences as well as random sequences, because this is the rigorous way we're identifying equilibria. (Another pause while I set up these runs and queue them...)
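The arithmetic behind run F can be sketched as follows. The recombination fractions follow directly from one 100 bp fragment per cycle. The length correction is an assumption: this sketch simply divides the whole-genome score by the genome length in kb, which may not be the correction actually used, and the scores in the usage line are invented.

```python
def fraction_recombined(genome_bp, fragment_bp=100, fragments_per_cycle=1):
    """Fraction of the genome replaced by recombination each cycle."""
    return fragment_bp * fragments_per_cycle / genome_bp


def score_per_kb(total_score, genome_bp):
    """Whole-genome score divided by genome length in kb (assumed correction)."""
    return total_score / (genome_bp / 1000)


for genome_bp in (20_000, 50_000, 100_000, 200_000):
    pct = 100 * fraction_recombined(genome_bp)
    print(f"{genome_bp // 1000} kb genome: {pct:.2f}% recombined per cycle")

# Invented scores: a 10-fold larger raw score in a 10-fold longer genome
# is the same score per kb.
same_density = score_per_kb(40, 20_000) == score_per_kb(400, 200_000)
```

So a whole-genome score that looks unchanged in a 200 kb genome is really a 10-fold lower density than the same score in a 20 kb genome.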

G. The final set of runs looked at what happens when a single large fragment (2, 5 or 10 kb) recombines into a 200 kb genome each cycle. Because there would otherwise be little mutation at positions away from uptake sequences, these runs also had a 10-fold elevated genomic mutation rate. The output genome sequences do have more uptake sequences than the initial random sequences, but the effect is quite small, and the scores for these runs were not significantly different from those for the runs described in the paragraph above, where the fragments were only 100 bp. This is expected (now that I think it through) because the only difference between the runs is that this set's fragments bring in 2-10 kb of random mutations in the DNA flanking the uptake sequence.

(I was going to add some more figures, but...)

Too many figures!

But where's the microscope?

This is the optical tweezers setup I'll initially be working with. The microscope slide chamber is clamped to the light-coloured micromanipulator controls at the center, with a water-immersion objective lens on its right side and a light condenser on its left side. The little black and yellow tube at the back left is the infrared laser, and the tall silver strip beside it holds the photodetector that detects the laser light after it is bounced through mirrors and lenses, the slide chamber and condenser, and another mirror. The visible light source is out of view on the left, and the rightmost black thing is the visible-light camera which lets you see what you're doing, via the grey cable that connects it to a computer screen. Lying on the table in front of the camera is a slide chamber, left by one of the biophysics students who've been using this apparatus.

Preparing for optical tweezers work

Faculty position cover letter Do's and Don't's

Do: Explain what distinguishes your work on regulatory protein-of-the-month from everyone else's.

Do: Recheck the final text just before you submit it.

Don't: Say that you're applying because you really want to live here.

Don't: Say that you only found out about the position because your buddy showed you the ad.

Don't: List generic research 'skills' such as gel electrophoresis, cell culture, and Microsoft Excel.

Don't: Say that you're the ideal candidate.

Don't: Say that you will happily apply your specialized techniques to any research question (i.e. you don't care about the science, you just want to play with your toys).

Don't: Give a full history of every project you've ever worked on.

Don't: Fill four pages.

Don't: Talk about your passion for research (or any other feelings).

Don't: Say you're hardworking.

Don't: Delay publishing a first-author paper on your post-doc work until you're about to apply for faculty positions.

Blue screen logouts in Excel - Numbers is not the answer.

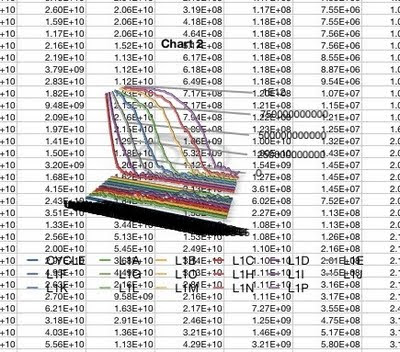

I've got a lot of data to analyze from all the simulations I've run, so I'm going to try using the Mac Numbers app instead. Whoa, doesn't look good. Here's what the default for a line graph produced:

Weird? Let me count the ways.

- The background is transparent.

- This is a 3-D graph.

- The lines are 3-D, like ropes.

- The lines have 3-D shadows.

- There are no visible axes.

- There is no scale.

- There are some numbers connected to some of the lines.

- The legend at the bottom seems to treat the X-axis values as Y-axis values.

Ah, the problems with the previous graph were partly that I had accidentally chosen 3-D. But my new graph is terrible too.

- The symbols are enormous and I can't find any way to change them or remove them.

- My X-axis values are being treated as Y-axis values - I don't know what it's using for the X-axis as the numbers are illegibly jammed together.

- My column headings (Cycles, Scores) are ignored.

Aha, changing to a scatter plot got it to use my Cycles data for the X-axis values. But now the lines connecting the points have disappeared and I see no way to get them back. And the new legend says that the Xs represent cycles, when they're really Scores. Going back to the line graph and moving the Cycles data over into the grey first column gave a semi-presentable graph, but now it's not treating the Cycles values as numbers but as textual labels, even though I discovered how to tell it that they are numbers.

I'm afraid Numbers appears to be just a toy app, suitable for children's school projects but not for serious work. I would RTFM but I can't find anything sensible.

Uptake sequence stability

New! Improved! The NIH plan!

- Uptake bias (across the outer membrane)

- Translocation biases (across the inner membrane)

- Cytoplasmic biases (nucleases and protection)

- Strand-exchange biases (RecA-dependence)

- Mismatch repair biases

Now, where was I?

Genome BC's submission software...

Genome BC proposal angst

I'm alternating between thinking that our various drafts are written very badly (so we should just abandon the proposal) and remembering that the science we propose is really dazzling. Back and forth and back and forth...

The horror!

- Signature page

- Participating Organizations Signatures ( ≠ signature page)

- Co-applicants

- Lay summary

- Summary

- Proposal (5 pages, plus 5 for references, figures etc.)

- Gantt chart (to be included with figures)

- SWOT analysis matrix and explanatory statement

- Strategic Outcomes (???)

- Project team

- Budget (Excel spreadsheet provided)

- Co-funding strategy

- Budget justification

- Documents supporting budget justification

- Supporting documents for co-funding

- List of researchers on the 'team' (just me and the post-doc?)

- Researcher profile for me

- Researcher profile for the post-doc

- List of collaborators and support

- Publications (we can attach five relevant pdfs)

- Certification forms form

- Biohazard certification form (we're supposed to get one especially for this proposal, but only if the project is approved)

limits set by sequencing errors

(*The limit becomes the square of the error rate, about 4 x 10^-6.)
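As a worked check of that footnote: the per-base error rate itself isn't stated here, so the 2 x 10^-3 below is back-calculated from the quoted limit, and the reading that the limit comes from the same position being miscalled in two independent reads is an assumption.

```python
# Per-base sequencing error rate, inferred as the square root of the
# quoted limit (about 4 x 10^-6). Not a figure given in the text.
error_rate = 2e-3

# Chance that two independent reads are both wrong at the same position.
double_error_limit = error_rate ** 2

print(f"single-read error rate: {error_rate:.0e}")
print(f"two-read limit:         {double_error_limit:.0e}")
```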

Background for our Genome BC proposal

In this analysis we'll be directly detecting a combination of (1) the transformation-specific biases of DNA uptake and translocation and (2) the non-specific biases of cytoplasmic nucleases, the RecA (Rec-1) strand annealing machinery, and mismatch repair. We'll be able to subtract the former from this combined signal, using information we'll get from the uptake and translocation parts of the NIH proposal (these are not in the Genome BC proposal).

RecA and its homologs provide the primary mechanism of homologous recombination in all cellular organisms. This recombination generates most of the new variation that's the raw material for natural selection (acting on mutations - the ultimate source of variation). Recombination is also a very important form of DNA repair; bacterial mutants lacking RecA are about 1000-fold more sensitive to UV radiation and other DNA-damaging agents.

We know almost nothing about the sequence biases of RecA-mediated recombination. Such biases are likely to exist (all proteins that interact with DNA have sequence biases), and they are likely to have very significant long-term consequences for genome evolution. The better-characterized biases of DNA repair processes are known to have had big effects on genomes; for example, the dramatic differences in base composition between different species are now thought to be almost entirely due to the cumulative effects of minor mutational biases compounded over billions of years of evolution.

RecA promotes recombination by first binding to single-stranded DNA, coating the strand with protein. I don't know whether the DNA is entirely buried within the protein-DNA filament, or whether the RecA molecules leave the DNA partly exposed. (Better find out!) The filament is then able to 'invade' double stranded DNA, separating the strands (?) and moving until it finds a position where the DNA in the filament can form base pairs with one of the strands.

(Break while I quickly read a book chapter about this by Chantal Prevost.)

OK, the ssDNA has its backbone coated with RecA, but its bases are exposed to the aqueous environment and free to interact with external ('incoming') dsDNA. The dsDNA is stretched by its interactions with the RecA-ssDNA filament (by about 40% of its B-form length); this may also open its base pairs for interaction with the bases of the ssDNA. But the pairs might not open, and the exposed bases of the ssDNA would instead interact with the sides of the base pairs via the major or minor groove in the double helix. A hybrid model (favoured by Prevost) has the exposed bases pairing with separated As and Ts of the dsDNA, but recognizing the still-intact G-C base pairs from one side. Prevost favours a model in which base pairing interactions between the stretched dsDNA and the ssDNA then form a triple helix (the stretching opens up the double helix, making room for the third strand), which is then converted to a conventional triple helix before the products separate.

With respect to our goal of characterizing the sequence bias over the whole genome, she says:

"Taken together, the available information on sequence recognition does not allow one to ratio the sequence effects on strand exchange or to extract predictive rules for recognition."

So there's need for our analysis.

Contact but not yet two-way communication

What's my 'dream research question'?

The plan is to have a new kind of brainstorming session on Sunday morning, with faculty or senior post-docs taking 3-5 minutes to describe a 'dream research question' that they would love to answer if there were no constraints on time, money or personnel. The rest of the attendees will be assigned to teams for each question (randomly with respect to expertise), and will have an hour to come up with a research program. Then each group will present their program (in a few minutes) and we'll all vote on who gets the prize.

So what should my dream question be? I'm leaning towards wanting to understand the organism-level ecology of a single bacterium. What features matter to it, minute to minute and day to day? What is available, and what is limiting? The difficulty here is scale - we are so big that we can't easily imagine what life is like at this scale. See for example Life at Low Reynolds Number by E. M. Purcell (this link is to a PDF). One problem in using this question for this session is that it isn't a single question but a whole world of ecology. Another is that I suspect what's needed is miniaturization skills, and none of us are likely to know anything about that.

Maybe we could do a "How has selection acted?" question. I would want to take the participants away from the more common "How might selection have acted?" and "How can selection act?" questions to focus on identifying what the real selective forces were.

Or maybe "What was the genome of the last universal ancestor?" The problem with this question is that it is probably not answerable at all, regardless of how much time or money or people are thrown at it.

I think I'd better keep thinking...

------------------------------------------------

I also signed up to do a poster about uptake sequence evolution. I'm a glutton for work.

Are we strategic yet?

- A Swot analysis (should it be SWOT?): This is a matrix whose four boxes describe the Strengths, Weaknesses, Opportunities and Threats (S W O T, get it?) associated with our proposal. Strengths and weaknesses are 'internal' factors, and Opportunities and Threats are external. I Googled this, and Wikipedia says that these are to be defined in the light of the goal of the work.

- A GANTT chart (pause while I Google this too): This appears to be a bar chart showing how the different stages of the proposed work will overlap. Unlike SWOT analyses, this should be Gantt, not GANTT, as this is the name of the person who first popularized such charts, about 100 years ago. Here's one from Wikipedia.

- Up to three pages of 'Co-Funding Strategy' (plus an Appendix with no page limit): These Genome BC grants require matching funds (see this post). The proposal forms provide a table to list details of the source or sources of this funding, with space under each to explain how the matching funds will directly support the objectives of the project. Oh dear, we're supposed to have a letter from the agency agreeing to our use of their funds to match Genome BC's grant... I think I'd better call Genome BC in the morning.

- A page of 'strategic outcomes', also explaining why these are of 'strategic importance' to British Columbia: I only recently realized that people distinguish between strategy and tactics, so I asked my colleagues what strategic might mean in this context (Google wasn't much help). Several didn't know any more than me, one recommended looking up the goals of the funding agency (sensible in any case), and one said he thought this just meant explaining the outcomes in the larger context of our long-term research goals.