This is a computer-simulation model project I made some progress on last summer. Now two post-docs and I are going to improve and extend it.

The goal is to simulate how uptake signal sequences (USSs, see two previous posts) could accumulate in genomes due to a bias in the competence machinery that brings extracellular DNA into the cell. We will then compare the predictions generated from different sets of assumptions with the actual distribution and variation of USSs in real genomes.

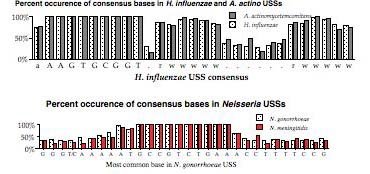

What real genomes can we compare with the model's predictions: The just-accepted USS paper examined USSs in eight related genomes, all from the family Pasteurellaceae. More pasteurellacean genome sequences are becoming available, including several different isolates of A. pleuropneumoniae and of H. influenzae. The bacteria in the family Neisseriaceae also have USSs in their genomes: N. gonorrhoeae (1 sequence) and N. meningitidis (2 sequences) for sure, and there might be USSs in other neisseriacean genomes that I don't know about. So far we know of three different types of USS: the two described in the USS paper, and the one shared by the two Neisseria species.

How and/or why do I think USSs accumulate: Many people have assumed that USSs evolved in bacterial genomes to serve as species-specific tags. Bacteria that have USSs also have DNA-uptake machinery that preferentially takes up DNA fragments containing their USS; this means that they preferentially take up their own DNA over DNA from unrelated organisms. Because most people have assumed that bacteria take up DNA to get new versions of genes, or to get new genes, they assumed that bacteria evolved USSs so they wouldn't take up probably useless and possibly dangerous foreign DNA.

I think these people have been misled by their ignorance of how evolution works (topic for another post) and that bacteria are really taking up DNA as food. Stated less teleologically, genes encoding DNA uptake machinery have been successful because bacteria that have them are able to efficiently use DNA from outside the cell as a source of nucleotides, by bringing the DNA into the cell and breaking it down. Sometimes this DNA recombines with the cell's own DNA, but I think that's just a side-effect of the DNA-repair machinery active in all cells. Bacteria that can't take up DNA can still get nucleotides from DNA outside the cell, but they have to do it by secreting nucleases that break down the DNA outside the cell, and then taking up any nucleotides that don't diffuse away. (As I just described it, DNA uptake seems to be a much more efficient way of getting the nucleotides, but really we don't know the details that would determine the relative costs.)

So, if DNA is just used as food, shouldn't any DNA be just as useful (nutritious) as any other? Why bother having USSs in your genome, if there's no need to exclude foreign DNA? In fact, having uptake machinery that prefers fragments with USSs may be costly, as it limits the cell's choices (makes the cells into 'picky eaters').

One simple explanation for USS and USS-preferring uptake is that the uptake specificity arises as a side-effect of the physical interactions between the machinery and the DNA. Most DNA-binding proteins have some degree of sequence preference (just as some handles fit our hands better than others), and a protein that needs to bind DNA tightly and force it across a membrane is likely to have stronger preferences than one that only loosely associates with DNA. A related factor is that the DNA probably must be bent or kinked to pass across the membrane, and some sequences bend and/or kink more easily than others.

This would explain why the DNA uptake machinery is biased. But why are there so many copies (1000-2000) of its preferred sequence in the genome, when there are only a few copies of similar but non-preferred sequences? I think the USSs accumulate in the genome by a kind of 'molecular drive', caused by the biased uptake system and by occasional recombination of the incoming DNA with the cell's own DNA. This molecular drive is inevitable provided the cells sometimes take up DNA from other members of their own 'species' (don't worry about how we might define 'species' in bacteria), and provided this DNA sometimes replaces the corresponding part of the cell's own DNA.
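A quick back-of-envelope calculation shows why 1000-2000 copies needs explaining. The sketch below (Python rather than the model's Perl) assumes independent bases at a fixed G+C fraction and computes how many perfect 9 bp cores, in either orientation, a random sequence would contain purely by chance. The helper name `expected_chance_uss` is hypothetical.

```python
def expected_chance_uss(genome_len, gc, core="AAGTGCGGT"):
    """Expected number of perfect cores, in either orientation, in a random
    sequence whose bases are independent with G+C fraction `gc`."""
    p = {"G": gc / 2, "C": gc / 2, "A": (1 - gc) / 2, "T": (1 - gc) / 2}
    p_site = 1.0
    for base in core:
        p_site *= p[base]
    n_windows = genome_len - len(core) + 1
    # The core is not its own reverse complement, so the two orientations are
    # different 9-mers with the same base content; their probabilities add.
    return 2 * n_windows * p_site


print(round(expected_chance_uss(200_000, 0.38), 2))    # prints 0.91
print(round(expected_chance_uss(1_830_000, 0.38), 1))  # prints 8.4
```

By this estimate, a random sequence as long as the whole H. influenzae genome (roughly 1.83 Mb) would carry only about eight perfect cores, more than a hundred times fewer than are actually present.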

The computer model is (will be) designed to make this molecular drive explanation explicit, and to compare its predictions with what is seen in real genomes.

Modeling with Perl: I chose to write the model in the computer language Perl. I had never used Perl, but knew that it is the preferred language for bioinformatics work because of how easily it works with sequences. So I bought a book called Beginning Perl for Bioinformatics and started writing and debugging code. Eventually I advanced to running simulations and improving code and debugging the 'improved' code and waiting around for simulations to reach the elusive equilibrium... Then it all got pushed aside by more urgent work. And now we're starting up again.

What the current version of the model can simulate: It starts by creating a long DNA sequence; 200,000 bp was a typical size I used (= about 10% of the H. influenzae genome). The base composition is specified by the user (38% G+C for H. influenzae). It then 'evolves' the DNA sequence through many generations of mutation (rate specified by user, usually ≤0.0001 changes per base pair per generation) and a step simulating DNA uptake+recombination. In this step, any USS-length sequence that is only one mismatch away from the H. influenzae USS core (AAGTGCGGT in one orientation, ACCGCACTT in the other) may be replaced by a perfect match to the USS. The probability that this happens is specified by the user (usually ≤0.1). In a more realistic version of the model, USS-length sequences that are two mismatches away can also be replaced, and both these and singly-mismatched sequences can be replaced by other singly-mismatched sequences.
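The two steps can be sketched in Python as below. This is not the Perl model, only a minimal illustration under stated assumptions: bases are independent, a mutation redraws the base from the user-specified composition (so the realized change rate is a little below `mu`), and only the simple one-mismatch-to-perfect replacement is implemented, not the two-mismatch variant. All function names are hypothetical.

```python
import random

BASES = "ACGT"
CORE = "AAGTGCGGT"                                               # H. influenzae USS core
RC_CORE = CORE.translate(str.maketrans("ACGT", "TGCA"))[::-1]    # ACCGCACTT


def base_weights(gc):
    """Sampling weights for A, C, G, T at the requested G+C fraction."""
    return [(1 - gc) / 2, gc / 2, gc / 2, (1 - gc) / 2]


def random_genome(length, gc, rng):
    return rng.choices(BASES, weights=base_weights(gc), k=length)


def mutate(genome, rate, gc, rng):
    """Each position is hit with probability `rate` and redrawn from the base
    composition (the redraw sometimes restores the same base)."""
    weights = base_weights(gc)
    for i in range(len(genome)):
        if rng.random() < rate:
            genome[i] = rng.choices(BASES, weights=weights)[0]


def mismatches(window, target):
    return sum(a != b for a, b in zip(window, target))


def uptake_recombination(genome, prob, rng):
    """Any 9 bp window one mismatch from the core (either orientation) is
    converted to a perfect match with probability `prob`."""
    k = len(CORE)
    for i in range(len(genome) - k + 1):
        window = genome[i:i + k]
        for target in (CORE, RC_CORE):
            if mismatches(window, target) == 1 and rng.random() < prob:
                genome[i:i + k] = list(target)
                break


def count_perfect(genome):
    s = "".join(genome)
    # Neither orientation can overlap itself, so str.count is exact here.
    return s.count(CORE) + s.count(RC_CORE)


def simulate(length, gc, mu, p_uptake, generations, seed=1):
    """Return the perfect-USS count at the start and after each generation."""
    rng = random.Random(seed)
    genome = random_genome(length, gc, rng)
    counts = [count_perfect(genome)]
    for _ in range(generations):
        mutate(genome, mu, gc, rng)
        uptake_recombination(genome, p_uptake, rng)
        counts.append(count_perfect(genome))
    return counts


print(simulate(20_000, 0.38, 1e-4, 0.1, 10))
```

A 200 kb genome run for many thousands of generations would need a faster scan than this per-window loop, for example one that only revisits windows near positions that just mutated.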

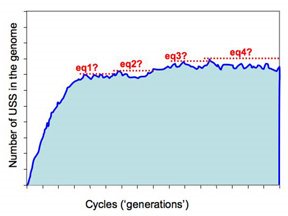

The consequence of repeating these two steps over many generations is that perfect USSs accumulate in the sequence. The program keeps track of the number and locations of these, and at specified intervals it reports the number of USSs in the genome. At the specified end of the run it reports more details, including the evolved sequence of the simulated genome (it reported the starting sequence when it began the run).
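Tracking locations as well as counts could look like the Python sketch below, which records each perfect core's start position and orientation ('+' for AAGTGCGGT, '-' for ACCGCACTT) and logs the count every `interval` generations. The regular-expression approach and the names `uss_positions` and `log_if_due` are illustrative assumptions, not the Perl program's code.

```python
import re

CORE = "AAGTGCGGT"      # H. influenzae USS core
RC_CORE = "ACCGCACTT"   # the same core read in the other orientation
# A zero-width lookahead reports every start position, even if matches overlap.
USS_RE = re.compile(f"(?=({CORE}|{RC_CORE}))")


def uss_positions(seq):
    """(start, orientation) of every perfect core; '+' = CORE, '-' = RC_CORE."""
    return [(m.start(), "+" if m.group(1) == CORE else "-")
            for m in USS_RE.finditer(seq)]


def log_if_due(generation, seq, interval, log):
    """Append (generation, number of USSs) to `log` every `interval` generations."""
    if generation % interval == 0:
        log.append((generation, len(uss_positions(seq))))


seq = "GGAAGTGCGGTCCACCGCACTTGG"
print(uss_positions(seq))   # prints [(2, '+'), (13, '-')]
```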

Two more complex versions of the program do the following:

1. Find the equilibrium distribution of USSs: For any settings, an equilibrium should be reached when the loss of USSs by mutation is balanced by the creation of USSs by mutation and uptake+recombination, i.e. when the effects of mutation and molecular drive are balanced. We want to know about this equilibrium for two reasons. First, we want to compare both equilibrium and non-equilibrium USS distributions to those of real genomes. Second, getting to true equilibrium takes MANY thousands of generations (sometimes maybe millions), and for most simulations we probably will only need to get 'reasonably close' to equilibrium. However, we can't know what 'reasonably close' is until we've characterized the approach to the true equilibrium. The model does this, watching for the number of USSs in the genome to stabilize. But for some important conditions this was taking a very long time (at least several days on a lab computer); this is what I think we'll need WestGrid for (see below).

2. Track what happens when the specificity changes: We know that the USS specificities of the two subclades of Pasteurellaceae have diverged (see USS paper abstract; sorry, I haven't figured out how to do internal links yet). If we can simulate how USS distributions change during such divergences, maybe we can make inferences about how the pasteurellacean divergence happened. In particular, we want to know whether the new USSs appear in the places of the old USSs or in new places. In the present model, once a preset number of USSs have accumulated, the uptake+recombination step changes its perfect USS sequence from the H. influenzae (Hin-type) USS to the A. pleuropneumoniae (Apl-type) USS. Over many more generations, this change in the molecular drive causes the genome to lose its Hin-type USSs and accumulate Apl-type USSs.

The Hin-type and Apl-type USSs differ at three positions (AAGTGCGGT vs ACAAGCGGT). The switch in specificity can occur in one step, or in three steps, each changing one position, with the new genome accumulating the intermediate USSs before the next specificity change is made. I think there are one-step and three-step versions of the program.
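For the three-step version, the differing positions and the series of intermediate USSs can be generated as in the Python sketch below. Which order the program changes the positions in isn't stated here, so the sketch simply enumerates all six possible orders. Indices are 0-based, so the second to fourth positions of the core appear as 1-3. The names `differing_positions` and `stepwise_path` are hypothetical.

```python
from itertools import permutations

HIN = "AAGTGCGGT"   # Hin-type core
APL = "ACAAGCGGT"   # Apl-type core


def differing_positions(a, b):
    """0-based indices where two equal-length cores differ."""
    return [i for i, (x, y) in enumerate(zip(a, b)) if x != y]


def stepwise_path(start, end, order):
    """Cores visited when the positions in `order` are switched one at a time."""
    current = list(start)
    path = [start]
    for i in order:
        current[i] = end[i]
        path.append("".join(current))
    return path


diffs = differing_positions(HIN, APL)
print(diffs)   # prints [1, 2, 3]
for order in permutations(diffs):
    print(order, " -> ".join(stepwise_path(HIN, APL, order)))
```

Each path ends at the Apl-type core; the two intermediates on each path are the sequences the genome would accumulate between specificity changes.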

At the end of each step, when the specified number of USSs have accumulated or the specified number of generations has been simulated, the positions of these USSs are compared to the positions of the previous-type USSs.
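One way to quantify 'same places or new places' is the fraction of new-type USSs that start within some tolerance of an old-type USS, as in the Python sketch below. It assumes positions stay comparable between steps, which holds if mutation and recombination only substitute bases and never change the genome length (as the steps described above appear to). The tolerance and the helper names are illustrative assumptions. To interpret the fraction, it should be compared with the value expected for randomly placed USSs.

```python
from bisect import bisect_left


def nearest_distance(pos, sorted_positions):
    """Distance from `pos` to the closest entry of a non-empty sorted list."""
    j = bisect_left(sorted_positions, pos)
    candidates = sorted_positions[max(j - 1, 0):j + 1]
    return min(abs(pos - p) for p in candidates)


def fraction_in_old_places(new_positions, old_positions, tolerance=0):
    """Fraction of new-type USSs starting within `tolerance` bp of an old-type USS."""
    if not new_positions or not old_positions:
        return 0.0
    old_sorted = sorted(old_positions)
    hits = sum(nearest_distance(p, old_sorted) <= tolerance for p in new_positions)
    return hits / len(new_positions)


old = [100, 500, 2000]
new = [100, 505, 1500, 3000]
print(fraction_in_old_places(new, old))                 # prints 0.25
print(fraction_in_old_places(new, old, tolerance=10))   # prints 0.5
```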

What I would like the improved model to simulate:

- Full-length USSs. Not just the 9 bp core but the flanking sequences too. This may be tricky because the flanking sequences appear to have a much looser consensus than the core.

- Effect of adjacent USSs on uptake+recombination. In most genomes most USSs are separated by only 1-2 kb, and in the lab bacteria readily take up big DNA fragments containing several or many USSs. But we have no idea how these USSs might interact during uptake. Different assumptions about how adjacent USSs interact during uptake should produce different distributions of USSs along the simulated genome. We will then be able to compare these distributions to those of real genomes and make inferences about the real interactions.

- Effects of USSs on other functions of DNA. The present model treats the DNA as function-less, as if we were only simulating a junk-DNA component of the genome. But most real DNA in bacterial genomes codes for proteins, and this will place varying constraints on where USSs will be tolerated.
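Comparing simulated and real USS spacing could start from the gaps between consecutive USSs, treating the chromosome as circular, plus a simple null expectation. The null in the Python sketch below is a Poisson approximation for n randomly placed sites on an L bp genome (the fraction of gaps no longer than x is about 1 - exp(-xn/L)); it is an assumption for comparison, not something the model currently computes, and the function names are hypothetical.

```python
import math


def spacings(positions, genome_length=None):
    """Gaps between consecutive USS start positions. If `genome_length` is
    given, the chromosome is treated as circular and the wrap-around gap is added."""
    pts = sorted(positions)
    gaps = [b - a for a, b in zip(pts, pts[1:])]
    if genome_length is not None and len(pts) > 1:
        gaps.append(genome_length - pts[-1] + pts[0])
    return gaps


def fraction_within(gaps, max_gap):
    """Fraction of gaps no longer than `max_gap` bp."""
    return sum(g <= max_gap for g in gaps) / len(gaps) if gaps else 0.0


def random_expectation(max_gap, n_sites, genome_length):
    """Poisson approximation for n randomly placed sites: P(gap <= max_gap)."""
    return 1 - math.exp(-max_gap * n_sites / genome_length)


gaps = spacings([100, 1200, 2500, 9000], genome_length=10_000)
print(gaps)                          # prints [1100, 1300, 6500, 1100]
print(fraction_within(gaps, 2000))   # prints 0.75
```

For example, 1500 randomly placed sites on a 1.83 Mb genome would already put about 80% of gaps at or under 2 kb, so the informative comparison is probably the shape of the spacing distribution rather than the typical spacing alone.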

I also want to be able to run the improved model (at least one version of it) on the WestGrid system. This network gives us free access to a high-powered system that can run computationally intensive programs; we'll probably need it to find some of the equilibria where the effects of mutation and molecular drive are balanced. The modifications needed for this are not very complicated, and this may be the first task we assign to the part-time student programming assistant we hope to hire.
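Finding equilibria, on WestGrid or anywhere else, needs a stopping rule for 'the number of USSs has stabilized'. The Python sketch below shows one hypothetical rule: compare the mean count over the most recent `window` samples with the mean over the `window` samples before them, and stop when they differ by less than a fractional tolerance `tol`. A slow, steady approach to equilibrium can still pass such a test, so `window` and `tol` would need to be calibrated against runs that have characterized the approach to true equilibrium.

```python
from statistics import mean


def looks_stable(counts, window, tol):
    """Hypothetical stopping rule: True when the mean USS count over the last
    `window` samples differs from the mean over the `window` samples before
    them by less than `tol`, as a fraction of the earlier mean."""
    if len(counts) < 2 * window:
        return False
    earlier = mean(counts[-2 * window:-window])
    recent = mean(counts[-window:])
    if earlier == 0:
        return False   # nothing has accumulated yet; keep running
    return abs(recent - earlier) / earlier < tol


rising = [10, 20, 30, 40, 50, 60, 70, 80]
plateau = [100, 104, 98, 101, 99, 102, 100, 101]
print(looks_stable(rising, 4, 0.05))    # prints False
print(looks_stable(plateau, 4, 0.05))   # prints True
```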