The Results section of the Sxy manuscript-in-progress has been revised, and I'm waiting for results and figures from the grad students before doing more work on it.

The CRP-S manuscript has been provisionally accepted, with reviews that were both complimentary and useful. Over the next few days the grad student whose work it reports will make the minor corrections it requests and do the new analyses suggested by one especially helpful reviewer, and we'll compose a response for the Editor. Then we'll resubmit and wait for the acceptance email telling us where to send our $1900 payment of the journal's 'Open Access Charge'.

A paragraph (oops, it's turning into an essay) about such publication charges. Until 10-20 years ago, almost all research was published in print journals whose costs of publication were covered by subscription fees and often by advertising revenue. Because only specialists read most journals, the subscription fees were high for individuals (often well over $100 for 12 issues per year) and exorbitant for institutions such as university libraries. Some journals were published by non-profit professional societies, and some by profit-seeking publishers. This arrangement was bad for science, because articles were only available to people whose grants or institutional libraries could pay for a subscription.

Now, much research is published electronically as well as or instead of on paper, and most of it is available free online, either immediately or after a profit-allowing delay (often 6 or 12 months). This is good - research results are much more widely available - but it has reduced the incentive to pay for a subscription, so journal revenues are down. Journals have responded by requiring large financial contributions from the authors of the papers they publish.

This smells like payola, and my knee-jerk reaction to the $1900 charge is outrage, but in fact it's a move in the right direction. The costs of disseminating research should be treated as part of the costs of doing science, and it makes much more sense to pay them as publication charges ($ from research grants) than as subscription fees ($ from university libraries). The 'usual' way (pay for the privilege of reading the papers) was the wrong way. It was the standard only because, before the internet, we didn't have the technology to make our findings widely available. (Readers interested in this issue should check out the Public Library of Science.)

Now, where was I? Oh yes, progress... We're still waiting for the final decision on our revised and resubmitted USS-2006 manuscript, but we don't expect any problems. The Perl project needs attention (the WestGrid server has unexpectedly given our requested runs the cold shoulder), so I'll try to sit down with the post-docs today to sort out where the bugs are and what to do next. I'll also get back into my 'unbiased' search for USS-related motifs in the genome sequence. And maybe I'll get back to the bench for a change - a collaborating grad student needs me to run an assay for him.

- Home

- Angry by Choice

- Catalogue of Organisms

- Chinleana

- Doc Madhattan

- Games with Words

- Genomics, Medicine, and Pseudoscience

- History of Geology

- Moss Plants and More

- Pleiotropy

- Plektix

- RRResearch

- Skeptic Wonder

- The Culture of Chemistry

- The Curious Wavefunction

- The Phytophactor

- The View from a Microbiologist

- Variety of Life

Field of Science

-

-

Change of address7 months ago in Variety of Life

-

Change of address7 months ago in Catalogue of Organisms

-

-

Earth Day: Pogo and our responsibility10 months ago in Doc Madhattan

-

What I Read 202410 months ago in Angry by Choice

-

I've moved to Substack. Come join me there.1 year ago in Genomics, Medicine, and Pseudoscience

-

-

-

-

Histological Evidence of Trauma in Dicynodont Tusks7 years ago in Chinleana

-

Posted: July 21, 2018 at 03:03PM7 years ago in Field Notes

-

Why doesn't all the GTA get taken up?7 years ago in RRResearch

-

-

Harnessing innate immunity to cure HIV9 years ago in Rule of 6ix

-

-

-

-

-

-

post doc job opportunity on ribosome biochemistry!11 years ago in Protein Evolution and Other Musings

-

Blogging Microbes- Communicating Microbiology to Netizens11 years ago in Memoirs of a Defective Brain

-

Re-Blog: June Was 6th Warmest Globally11 years ago in The View from a Microbiologist

-

-

-

The Lure of the Obscure? Guest Post by Frank Stahl13 years ago in Sex, Genes & Evolution

-

-

Lab Rat Moving House14 years ago in Life of a Lab Rat

-

Goodbye FoS, thanks for all the laughs14 years ago in Disease Prone

-

-

Slideshow of NASA's Stardust-NExT Mission Comet Tempel 1 Flyby15 years ago in The Large Picture Blog

-

in The Biology Files

Not your typical science blog, but an 'open science' research blog. Watch me fumbling my way towards understanding how and why bacteria take up DNA, and getting distracted by other cool questions.

Representing results

One of the grad students and I continue to struggle with finding the best way to represent her results.

The results take the form of gel images - autoradiograms of positions of bands in the gel, representing the sizes of radioactive RNA fragments. But which kinds of bands convey significant information depends on the enzyme that's been used and on the pattern seen in the 'ladder' (unfolded RNA, the control) lane. But the images of the gels are too complex for the reader to make sense of without a lot of effort, and we present a typical one only to reassure the reader that the analysis was competently done. Thus we need to develop a representation that summarizes the gel results.

Sometimes the presence of a band in the 'structure' (folded RNA, the test) lane is significant information, telling us that the folding of the RNA lets the enzyme cut at a place that it couldn't in the unfolded RNA. Other times (different position or different enzyme) the presence of a band tells us nothing. Sometimes the absence of a band tells us that the folding makes that part of the RNA inaccessible, but sometimes it is just because that enzyme can't ever cut at that kind of position. To make matters worse, the gel results, though reasonably reproducible, are not always exactly the same, probably due to slight and unavoidable fluctuations in temperatures and volume measurements.

So we want our representation to convey both positive information and lack of information. One representation needs to say "These bases of the RNA have this cutting pattern with this enzyme, and these other bases have that pattern" and another needs to say "These bases of the RNA have this structure and these other bases have that pattern".

We're still working on it.

The results take the form of gel images - autoradiograms of positions of bands in the gel, representing the sizes of radioactive RNA fragments. But which kinds of bands convey significant information depends on the enzyme that's been used and on the pattern seen in the 'ladder' (unfolded RNA, the control) lane. But the images of the gels are too complex for the reader to make sense of without a lot of effort, and we present a typical one only to reassure the reader that the analysis was competently done. Thus we need to develop a representation that summarizes the gel results.

Sometimes the presence of a band in the 'structure' (folded RNA, the test) lane is significant information, telling us that the folding of the RNA lets the enzyme cut at a place that it couldn't in the unfolded RNA. Other times (different position or different enzyme) the presence of a band tells us nothing. Sometimes the absence of a band tells us that the folding makes that part of the RNA inaccessible, but sometimes it is just because that enzyme can't ever cut at that kind of position. To make matters worse, the gel results, though reasonably reproducible, are not always exactly the same, probably due to slight and unavoidable fluctuations in temperatures and volume measurements.

So we want our representation to convey both positive information and lack of information. One representation needs to say "These bases of the RNA have this cutting pattern with this enzyme, and these other bases have that pattern" and another needs to say "These bases of the RNA have this structure and these other bases have that pattern".

We're still working on it.

The Sxy paper

The grad students and I sat down yesterday to go over the Results section of our rough manuscript about how the cell regulates production of the Sxy protein. Sxy acts with the broad nutritional regulator CRP to induce the other competence genes, so understanding how Sxy is regulated is the most important part of understanding how competence is regulated. We spent most of our time working out the best order for presenting results in, and the best ways to display the results in figures.

The previous version had quite a bit of important data presented numerically in two tables. We've now decided to instead present this data as bar graphs. What matters about this data is not so much the particular transformation-frequency (TF) values we've measured for the different mutants and growth conditions as the relationships between the values (e.g. that cells in late- log growth have much higher TFs than cells in early-log growth, and that the sxy-1-5 mutants have much higher TFs than the wildtype cells). Presenting the data as graphs makes it much easier for the reader to make the important comparisons and see the patterns.

Our first priority now is to get the figures in at least a rough approximation of their final form. We think we have almost all the data we want for this paper. One last experiment - asking if the sxy mutations alter how cells respond to nucleotides (see old posts on Sxy and PurR regulation - sorry I haven't figured out how to link directly to them) - will be completed this week, if all goes well. And one gel (or pair of gels) will probably need to be rerun, to get the best resolution of the bands we want to display.

Yesterday I made "place-marker" versions of the figures we don't have yet, and renumbered all the figures to fit our new plan. Today I'm making the Results-reorganization changes we decided on.

The previous version had quite a bit of important data presented numerically in two tables. We've now decided to instead present this data as bar graphs. What matters about this data is not so much the particular transformation-frequency (TF) values we've measured for the different mutants and growth conditions as the relationships between the values (e.g. that cells in late- log growth have much higher TFs than cells in early-log growth, and that the sxy-1-5 mutants have much higher TFs than the wildtype cells). Presenting the data as graphs makes it much easier for the reader to make the important comparisons and see the patterns.

Our first priority now is to get the figures in at least a rough approximation of their final form. We think we have almost all the data we want for this paper. One last experiment - asking if the sxy mutations alter how cells respond to nucleotides (see old posts on Sxy and PurR regulation - sorry I haven't figured out how to link directly to them) - will be completed this week, if all goes well. And one gel (or pair of gels) will probably need to be rerun, to get the best resolution of the bands we want to display.

Yesterday I made "place-marker" versions of the figures we don't have yet, and renumbered all the figures to fit our new plan. Today I'm making the Results-reorganization changes we decided on.

Are we equilibrated yet?

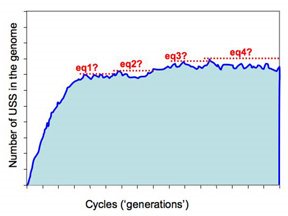

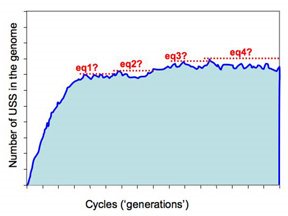

A post-doc and I need to decide the best way to tell when our USS simulations reach equilibrium. This is tricky.

We do expect that each run of the simulation will come to an equilibrium where the processes enriching the genome for USS are balanced by the processes removing USS from the genome. The difficulty comes in deciding how we will recognize that the simulation has reached this state. Because these are 'stochastic' simulations, the numbers of USS undergo a lot of random fluctuation even at equilibrium, and we need to decide when the long-term average has become sufficiently stable to qualify as 'equilibrium'. When the simulation first starts, the number of USSs increases quickly, but as it gets closer to equilibrium the rate of increase gets slower and slower.

How do we decide when to stop? As with many other things, how we decide to do this will depend on how long we're willing to wait, and on how accurate we want our answers to be.

Some versions of the simulations run fast; these are ones we've set to use small 'genomes' (e.g. 10,000bp) and high mutation rates and high uptake probabilities and (probably strong biases. We can easily let these run for many cycles beyond what looks like equilibrium, so that we're sure that the long-term average USS number is stable despite the short-term fluctuations. I think we should do lots of these, so we have a very clear expectation of what to expect.

But the more realistic versions of the simulations will run much slower, as they'll have larger genomes (maybe even as big as the H. influenzae genome, ~1,830,000bp) and lower mutation rates. These will be run remotely on the WestGrid system, and we need to build into them some criteria that tells them when to st op. The present approach is illustrated in this figure.

op. The present approach is illustrated in this figure.

The program checks for equilibrium by keeping track of the largest number of USS present in any previous cycle. If a sufficiently long time elapses without this number being exceeded, the run is treated as being at equilibrium and the long-range average number of USS calculated. So the dotted red lines in the figure illustrate four 'local maximum' USS numbers, and the time elapsed before that number was exceeded.

So how long is 'sufficiently long'? Because different settings affect whether it takes 1000 or 1,000,000 cycles to get to equilibrium, 'sufficiently long' is set as a percent of the total number of elapsed cycles. In the past I think I've used 20%, but I don't have any special reason to think this is the best choice. Beginning by doing lots of fast simulations should give us a much better understanding of what matters here.

We do expect that each run of the simulation will come to an equilibrium where the processes enriching the genome for USS are balanced by the processes removing USS from the genome. The difficulty comes in deciding how we will recognize that the simulation has reached this state. Because these are 'stochastic' simulations, the numbers of USS undergo a lot of random fluctuation even at equilibrium, and we need to decide when the long-term average has become sufficiently stable to qualify as 'equilibrium'. When the simulation first starts, the number of USSs increases quickly, but as it gets closer to equilibrium the rate of increase gets slower and slower.

How do we decide when to stop? As with many other things, how we decide to do this will depend on how long we're willing to wait, and on how accurate we want our answers to be.

Some versions of the simulations run fast; these are ones we've set to use small 'genomes' (e.g. 10,000bp) and high mutation rates and high uptake probabilities and (probably strong biases. We can easily let these run for many cycles beyond what looks like equilibrium, so that we're sure that the long-term average USS number is stable despite the short-term fluctuations. I think we should do lots of these, so we have a very clear expectation of what to expect.

But the more realistic versions of the simulations will run much slower, as they'll have larger genomes (maybe even as big as the H. influenzae genome, ~1,830,000bp) and lower mutation rates. These will be run remotely on the WestGrid system, and we need to build into them some criteria that tells them when to st

op. The present approach is illustrated in this figure.

op. The present approach is illustrated in this figure.The program checks for equilibrium by keeping track of the largest number of USS present in any previous cycle. If a sufficiently long time elapses without this number being exceeded, the run is treated as being at equilibrium and the long-range average number of USS calculated. So the dotted red lines in the figure illustrate four 'local maximum' USS numbers, and the time elapsed before that number was exceeded.

So how long is 'sufficiently long'? Because different settings affect whether it takes 1000 or 1,000,000 cycles to get to equilibrium, 'sufficiently long' is set as a percent of the total number of elapsed cycles. In the past I think I've used 20%, but I don't have any special reason to think this is the best choice. Beginning by doing lots of fast simulations should give us a much better understanding of what matters here.

Starting the unbiased look at genome USSs

I'm drafting a manuscript that will compare the sequence preference of the H. influenzae DNA uptake machinery to the consensus sequence of the USS-related repeats in the H. influenzae genome. The previous posts (Reanalysis of old uptake data and New wine in old bottles) were about assembling old uptake-preference data. This post is about work towards an unbiased consensus of the USS-related repeats in the genome.

As I discussed at the end of Representation matters, our present understanding of the USS-like repeats comes from analyses that were intrinsically biased. For example, the analyses presented in our USS-2006 manuscript (see link in sidebar) began with the assumption that the USS would be best defined by a unbroken string of approximately 9 bases. However some of these analyses led to consensus sequences where the flanking AT-rich segments had nearly as strong a consensus as the core we were searching for, leading me to suspect that, if we had instead searched for the flanking consensus, we might have gotten a different but equally valid (or equally invalid) USS consensus. (I'm not happy with this description of the problem, but I'll continue anyway.)

So my goal is to use a motif-search program to search the genome for highly-repeated patterns, starting the searches with as few assumptions as possible, and varying those assumptions to include other possibilities. Several such programs are available on-line; one of the grad students has quite a bit of experience with them. Usually you can just paste the sequences you want analyzed into a web form, click a few boxes, and the analysis appears on the screen (or is sent to your in-box). My kind of bioinformatics.

The grad student advises using the Gibbs Motif Sampler, which is available in simplified form through RSA-Tools and in more sophisticated form through the Wadsworth Institute. The FASTA problem is solved (thanks to helpful readers), so I first tried just giving both versions the first 25000bp of the genome sequence, in five 5000bp sequences, and asking them to find one 9bp motif. The Gibbs analysis is built on Bayesian probabilities, so it asks you to specify a 'prior' expected number of occurrences of the motif. (I'm glad that I now understand a bit about what Bayesian means, if not how to do it - see posts early this month.)

The results come back in various forms; tables of frequencies of each base at each position, and an alignment of the occurrences of the motif it found. The best way to visualize the results is to hand then alignment over to the sequence logo program Weblogo (see Representation matters).

Here are a couple of examples of the logos. The one on the left comes from a search for a 10bp motif, and the one on the right from a search for a 30bp motif.

The first thing I learned (of course) was that this analysis wasn't going to be as simple as I'd hoped.

I already know that the long version of the USS consensus is a bit 'palindromic', because Ham Smith pointed this out when he discovered the long version in 1995. ...Brief interruption while I looked for a decent explanation of 'DNA palindrome' on-line, found nothing, and then drew the accompanying illustration. These base-paired DNA sequences are palindromic. In each, the sequence of the upper strand, read from its 5' end to its 3' end (red arrows) is the same as the sequence of the lower strand, read from its 5' end to its 3' end (blue arrows). (The analogy is to a text palindrome like "Madam, I'm Adam".

sequence of the lower strand, read from its 5' end to its 3' end (blue arrows). (The analogy is to a text palindrome like "Madam, I'm Adam".

The USS consensus is far from being a perfect palindrome, but looking at the logo of the long version above shows that there are AAAs at the left end, TTTs at the right end, and a TTTAAA bit in the middle. For the Gibbs motif searches, this is enough that, if the expected number of occurrences is high (i.e. the search stringency is low), it aligns "+"-orientation USSs to "-"-orientation ones.

This only makes sense when we remember that the USS core is not at all palindromic, so on one strand it reads 5'-AAGTGCGGT and on the other strand it reads 5'-TTCACGCCA. About half the USSs in the genome point in one direction (e.g. clockwise around the genome, we can call this "+") and the other half point the other way ("-").

The tendency of Gibbs to align some of them back-to-front can be reduced (maybe even eliminated) by setting the expected number of occurrences quite low (no higher than the number of perfect USS cores we would really expect). This doesn't prevent the Gibbs program from finding more than this number. I need to think more about whether this does prevent it from finding occurrences that match quite imperfectly - my difficulty is that my own brain has a strong tendency to think mainly about the USS core.

I think this is how science is supposed to work - a kind of dialectic between reality and our biases. We start each analysis with expectations that don't match reality, then our biased analysis produces results that, although still wrong, are nevertheless closer to reality than the expectations we started with. We then take these modified expectations into the next analysis, and again get results that are a bit closer to reality. We never perfectly comprehend reality, but with good science we get closer and closer.

As I discussed at the end of Representation matters, our present understanding of the USS-like repeats comes from analyses that were intrinsically biased. For example, the analyses presented in our USS-2006 manuscript (see link in sidebar) began with the assumption that the USS would be best defined by a unbroken string of approximately 9 bases. However some of these analyses led to consensus sequences where the flanking AT-rich segments had nearly as strong a consensus as the core we were searching for, leading me to suspect that, if we had instead searched for the flanking consensus, we might have gotten a different but equally valid (or equally invalid) USS consensus. (I'm not happy with this description of the problem, but I'll continue anyway.)

So my goal is to use a motif-search program to search the genome for highly-repeated patterns, starting the searches with as few assumptions as possible, and varying those assumptions to include other possibilities. Several such programs are available on-line; one of the grad students has quite a bit of experience with them. Usually you can just paste the sequences you want analyzed into a web form, click a few boxes, and the analysis appears on the screen (or is sent to your in-box). My kind of bioinformatics.

The grad student advises using the Gibbs Motif Sampler, which is available in simplified form through RSA-Tools and in more sophisticated form through the Wadsworth Institute. The FASTA problem is solved (thanks to helpful readers), so I first tried just giving both versions the first 25000bp of the genome sequence, in five 5000bp sequences, and asking them to find one 9bp motif. The Gibbs analysis is built on Bayesian probabilities, so it asks you to specify a 'prior' expected number of occurrences of the motif. (I'm glad that I now understand a bit about what Bayesian means, if not how to do it - see posts early this month.)

The results come back in various forms; tables of frequencies of each base at each position, and an alignment of the occurrences of the motif it found. The best way to visualize the results is to hand then alignment over to the sequence logo program Weblogo (see Representation matters).

Here are a couple of examples of the logos. The one on the left comes from a search for a 10bp motif, and the one on the right from a search for a 30bp motif.

The first thing I learned (of course) was that this analysis wasn't going to be as simple as I'd hoped.

I already know that the long version of the USS consensus is a bit 'palindromic', because Ham Smith pointed this out when he discovered the long version in 1995. ...Brief interruption while I looked for a decent explanation of 'DNA palindrome' on-line, found nothing, and then drew the accompanying illustration. These base-paired DNA sequences are palindromic. In each, the sequence of the upper strand, read from its 5' end to its 3' end (red arrows) is the same as the

sequence of the lower strand, read from its 5' end to its 3' end (blue arrows). (The analogy is to a text palindrome like "Madam, I'm Adam".

sequence of the lower strand, read from its 5' end to its 3' end (blue arrows). (The analogy is to a text palindrome like "Madam, I'm Adam".The USS consensus is far from being a perfect palindrome, but looking at the logo of the long version above shows that there are AAAs at the left end, TTTs at the right end, and a TTTAAA bit in the middle. For the Gibbs motif searches, this is enough that, if the expected number of occurrences is high (i.e. the search stringency is low), it aligns "+"-orientation USSs to "-"-orientation ones.

This only makes sense when we remember that the USS core is not at all palindromic, so on one strand it reads 5'-AAGTGCGGT and on the other strand it reads 5'-TTCACGCCA. About half the USSs in the genome point in one direction (e.g. clockwise around the genome, we can call this "+") and the other half point the other way ("-").

The tendency of Gibbs to align some of them back-to-front can be reduced (maybe even eliminated) by setting the expected number of occurrences quite low (no higher than the number of perfect USS cores we would really expect). This doesn't prevent the Gibbs program from finding more than this number. I need to think more about whether this does prevent it from finding occurrences that match quite imperfectly - my difficulty is that my own brain has a strong tendency to think mainly about the USS core.

I think this is how science is supposed to work - a kind of dialectic between reality and our biases. We start each analysis with expectations that don't match reality, then our biased analysis produces results that, although still wrong, are nevertheless closer to reality than the expectations we started with. We then take these modified expectations into the next analysis, and again get results that are a bit closer to reality. We never perfectly comprehend reality, but with good science we get closer and closer.

Reanalysis of old uptake data

I've started reanalyzing the old DNA-uptake data (see New bottles for old wine). Yesterday I succeeded in using the Gibbs motif-search software (thank you RSA Tools!) to analyze the sequences from the 1990 paper, and was encouraged when it did find a USS motif in 15 of the 28 sequences. These 15 were fragments that cells had strongly preferred to take up, and the USS motif looks very much like the one derived previously from the whole-genome consensus. This result is very preliminary (I haven't yet kept any notes or done it meticulously), but it suggests that the bias of the uptake machinery does correspond well to the consensus of the genome repeats.

Today I did the preliminary analysis (this time keeping notes) of the phage-derived sequences from one of the earlier papers (1984). These sequences had not been put into GenBank as a neat set, so I had to download the phage sequence and use a nice shareware DNA-analysis program (Sequence Analysis; thank you Will Gilbert!) to identify the sequences of the five short fragments I will analyze.

I still need to deal with an annoying format problem. The motif-search programs accept DNA sequences only in particular formats, of which the simplest is "FASTA". FASTA identifies comment lines by starting them with an ">", but for some reason these programs treat the text after my ">"s as sequence. Of course they choke, because the text contains non-sequence characters (i.e. not just A G C T and N). If I paste FASTA-format sequence in directly from GenBank there's no problem, so I think Word is doing something weird with the ">" character. I need to find a better text editor for Macs (maybe Mi). Unfortunately TextEdit has been 'improved' to the point where it can no longer handle plain text - it insists on saving all files as RTF or HTML.

Today I did the preliminary analysis (this time keeping notes) of the phage-derived sequences from one of the earlier papers (1984). These sequences had not been put into GenBank as a neat set, so I had to download the phage sequence and use a nice shareware DNA-analysis program (Sequence Analysis; thank you Will Gilbert!) to identify the sequences of the five short fragments I will analyze.

I still need to deal with an annoying format problem. The motif-search programs accept DNA sequences only in particular formats, of which the simplest is "FASTA". FASTA identifies comment lines by starting them with an ">", but for some reason these programs treat the text after my ">"s as sequence. Of course they choke, because the text contains non-sequence characters (i.e. not just A G C T and N). If I paste FASTA-format sequence in directly from GenBank there's no problem, so I think Word is doing something weird with the ">" character. I need to find a better text editor for Macs (maybe Mi). Unfortunately TextEdit has been 'improved' to the point where it can no longer handle plain text - it insists on saving all files as RTF or HTML.

The ligase puzzle

The old uptake-specificity papers I'm reading (to reanalyze their uptake data, see previous post) include gels showing pools of radioactively-labeled DNA fragments before and after being taken up by cells. In addition to the data I'm looking for, these gels remind me of a puzzle involving a gene for a ligase.

before | uptake

Usually the 'before' pool used in these gels contains end-labeled restriction fragments of a plasmid containing a H. influenzae DNA insert. All the bands are of equal intensity because there are the same number of molecules of each piece of the plasmid. The left lane of the gel figure shows such a 'before' sample. The labeled DNA is then mixed with competent cells, and after a few minutes the DNA that hasn't been taken up is washed away and/or degraded with DNaseI, and DNA is purified from the cells and run in a gel with the 'before' sample. (This 'uptake' DNA prep includes the cells' own DNA, but that's not radioactive so it doesn't show up on the gel.)

Usually the 'before' pool used in these gels contains end-labeled restriction fragments of a plasmid containing a H. influenzae DNA insert. All the bands are of equal intensity because there are the same number of molecules of each piece of the plasmid. The left lane of the gel figure shows such a 'before' sample. The labeled DNA is then mixed with competent cells, and after a few minutes the DNA that hasn't been taken up is washed away and/or degraded with DNaseI, and DNA is purified from the cells and run in a gel with the 'before' sample. (This 'uptake' DNA prep includes the cells' own DNA, but that's not radioactive so it doesn't show up on the gel.)

There are two kinds of differences between the before and after lanes. First, some of the 'before' bands are missing (or faint) in the 'uptake' lane. That's because these fragments lack USSs and so were taken up poorly or not at all. Second, faint new bands appear that weren't in the Before sample. These are due to the ligase puzzle.

The authors of the paper said that the new bands appeared because of a ligase enzyme that joins the ends of DNA fragments while they are in the periplasm (the space between the outer and inner membranes. Similar bands were seen in similar experiments in later papers from this lab. A ligase in the periplasm has also been invoked to explain the presence of joined-together DNA fragments recombined into chromosomal DNA.

But the whole idea of a periplasmic ligase seemed a bit odd, as what would a ligase do in the periplasm? There isn't normally any DNA there, and even during DNA uptake there's no obvious role for a ligase.

However, when we did a microarray analysis to identify the genes turned on when cells become competent (see link to CRE-2005 paper in sidebar), we found that one of the genes turned on encodes a ligase with a signal sequence that should target it to the periplasm. Unbeknownst to us, the enzyme had already been well-characterized by biochemists - it's a real ligase, but the ATP-dependent kind typical of some phages, rather than the NAD-dependent kind that acts in DNA replication and repair.

So not only were the early researchers correct in invoking a ligase in the periplasm, but this ligase is specifically turned on when cells are preparing to take up DNA. Consistent with such a role, VanWagoner knocked out the ligase gene and found that transformation was reduced about six-fold. However an undergraduate student in our lab spent last year trying unsuccessfully to see evidence of the ligase activity, and she could not replicate this six-fold reduction.

This ligase needs ATP as a source of energy for its ligation reaction. But as far as we can find out, there is no ATP in the periplasm. In fact, the periplasm contains phosphatases that would cut the 'P' off of any ATP that found its way into the periplasm. One solution would be to have the ligase arrive in the periplasm already loaded with ATP. This is consistent with how such enzymes act - they first form a covalent bond with ATP, and then look for DNA to act on. But I don't know if the machinery that transports enzymes into the periplasm could use a ligase that had already assembled with its ATP. Furthermore, such an 'enzyme' could only act once, and it's hard to imagine that taking up DNA is so important that each molecule is worth sacrificing a whole ligase for.

Bottom line: we still have no idea what role this ligase might play in DNA uptake. If the ligase was essential for DNA uptake, explaining what it accomplishes might be seen as a test of any proposed mechanism of DNA uptake. It's easier to think of roles in the cytoplasm, but all the evidence points to action in the periplasm.

before | uptake

Usually the 'before' pool used in these gels contains end-labeled restriction fragments of a plasmid containing a H. influenzae DNA insert. All the bands are of equal intensity because there are the same number of molecules of each piece of the plasmid. The left lane of the gel figure shows such a 'before' sample. The labeled DNA is then mixed with competent cells, and after a few minutes the DNA that hasn't been taken up is washed away and/or degraded with DNaseI, and DNA is purified from the cells and run in a gel with the 'before' sample. (This 'uptake' DNA prep includes the cells' own DNA, but that's not radioactive so it doesn't show up on the gel.)

Usually the 'before' pool used in these gels contains end-labeled restriction fragments of a plasmid containing a H. influenzae DNA insert. All the bands are of equal intensity because there are the same number of molecules of each piece of the plasmid. The left lane of the gel figure shows such a 'before' sample. The labeled DNA is then mixed with competent cells, and after a few minutes the DNA that hasn't been taken up is washed away and/or degraded with DNaseI, and DNA is purified from the cells and run in a gel with the 'before' sample. (This 'uptake' DNA prep includes the cells' own DNA, but that's not radioactive so it doesn't show up on the gel.)There are two kinds of differences between the before and after lanes. First, some of the 'before' bands are missing (or faint) in the 'uptake' lane. That's because these fragments lack USSs and so were taken up poorly or not at all. Second, faint new bands appear that weren't in the Before sample. These are due to the ligase puzzle.

The authors of the paper said that the new bands appeared because of a ligase enzyme that joins the ends of DNA fragments while they are in the periplasm (the space between the outer and inner membranes. Similar bands were seen in similar experiments in later papers from this lab. A ligase in the periplasm has also been invoked to explain the presence of joined-together DNA fragments recombined into chromosomal DNA.

But the whole idea of a periplasmic ligase seemed a bit odd, as what would a ligase do in the periplasm? There isn't normally any DNA there, and even during DNA uptake there's no obvious role for a ligase.

However, when we did a microarray analysis to identify the genes turned on when cells become competent (see link to CRE-2005 paper in sidebar), we found that one of the genes turned on encodes a ligase with a signal sequence that should target it to the periplasm. Unbeknownst to us, the enzyme had already been well-characterized by biochemists - it's a real ligase, but the ATP-dependent kind typical of some phages, rather than the NAD-dependent kind that acts in DNA replication and repair.

So not only were the early researchers correct in invoking a ligase in the periplasm, but this ligase is specifically turned on when cells are preparing to take up DNA. Consistent with such a role, VanWagoner knocked out the ligase gene and found that transformation was reduced about six-fold. However an undergraduate student in our lab spent last year trying unsuccessfully to see evidence of the ligase activity, and she could not replicate this six-fold reduction.

This ligase needs ATP as a source of energy for its ligation reaction. But as far as we can find out, there is no ATP in the periplasm. In fact, the periplasm contains phosphatases that would cut the 'P' off of any ATP that found its way into the periplasm. One solution would be to have the ligase arrive in the periplasm already loaded with ATP. This is consistent with how such enzymes act - they first form a covalent bond with ATP, and then look for DNA to act on. But I don't know if the machinery that transports enzymes into the periplasm could use a ligase that had already assembled with its ATP. Furthermore, such an 'enzyme' could only act once, and it's hard to imagine that taking up DNA is so important that each molecule is worth sacrificing a whole ligase for.

Bottom line: we still have no idea what role this ligase might play in DNA uptake. If the ligase was essential for DNA uptake, explaining what it accomplishes might be seen as a test of any proposed mechanism of DNA uptake. It's easier to think of roles in the cytoplasm, but all the evidence points to action in the periplasm.

New bottles for old wine

I need to decide how to reanalyze some old data.

In a previous post (Representation matters) I mentioned that I had assembled into a Word file some data from old publications by other labs. This file now contains the sequences of all the DNA fragments whose uptake by H. influenzae has been measured, and some indication of how well each fragment was taken up. I want to reanalyze this data to see if I can pull out more information than was available to the original experimenters. I think this might qualify as a 'meta-analysis' because the data comes from several independent studies; I'll say a bit more about meta-analysis at the end of this post.

I've played around with this data in the past, just to see if I could see any new patterns, but now it's time to get serious because I want to be able to include the analysis in a paper correlating the abundance of different USS variants in the genome with the preferences of the uptake machinery. First I should assemble all of the four papers and reread them carefully. One of them has disappeared and isn't available on-line - I may have to walk over to the library tomorrow to get a copy (how old-fashioned!).

The most detailed study (also the most recent - 1990) looked at 28 plasmids with inserts of short H. influenzae DNA fragments. The uptake scores were published, and the insert sequences are in GenBank. All of these plasmids were preferentially taken up over a plasmid with no insert. The uptake scores of 15 of these inserts were also measured after being cut away from the plasmid vector; in most but not all cases uptake of the fragment correlated well with uptake of the plasmid that contained it. And most but not all of the sequenced inserts contained sequences resembling the USS core.

The other papers are older (1980-84). The first reports the work that first identified the USS core sequence. They sequenced four fragments that were preferentially taken up, and found five copies of an 11bp sequence. But there are complications that make this work hard to compare to the 1990 results. First, the DNA fragments did not come from H. influenzae but from the related bacterium H. parainfluenzae. I don't think this should matter. Second, uptake was not quantitated, just scored as yes or no; the band intensities in their gel suggest these fragments are taken up at least 10-fold better than other (no USS) fragments. A later paper (1982) from the same lab examined uptake of two of these fragments more quantitatively, and also looked at fragments with synthetic USSs, using what was then very new technology. The paper gives more gels, and also relative uptake scores for some of the fragments they tested. The final paper also measured relative uptake of purified fragments, this time from the H. influenzae phage HP1.

The 1990 data could be further analyzed by using software to do an unbiased motif-search - does this find the USS pattern? This could be restricted to those fragments that were strongly taken up, or applied to all the fragments. And does how well a particular fragment is taken up correlate with its having a better or worse match to this USS motif?

I would like to be able to then add the results of the earlier work into this analysis, but it's complicated by not having comparable measures of uptake. I think I will have to make some inferences, based on info that is common to the different papers, such as the uptake of the 'negative' fragments. But these inferences will probably not be as solidly justified as I would like.

I think this kind of problem is common to meta-analysis generally. Meta-analysis takes results from different studies that are individually weak or flawed in different ways, and tries to infer a stronger conclusion than could be obtained from any one of the studies. But because the studies are different, approximations always have to be made so the variables analyzed can be made comparable.

In a previous post (Representation matters) I mentioned that I had assembled into a Word file some data from old publications by other labs. This file now contains the sequences of all the DNA fragments whose uptake by H. influenzae has been measured, and some indication of how well each fragment was taken up. I want to reanalyze this data to see if I can pull out more information than was available to the original experimenters. I think this might qualify as a 'meta-analysis' because the data comes from several independent studies; I'll say a bit more about meta-analysis at the end of this post.

I've played around with this data in the past, just to see if I could see any new patterns, but now it's time to get serious because I want to be able to include the analysis in a paper correlating the abundance of different USS variants in the genome with the preferences of the uptake machinery. First I should assemble all of the four papers and reread them carefully. One of them has disappeared and isn't available on-line - I may have to walk over to the library tomorrow to get a copy (how old-fashioned!).

The most detailed study (also the most recent - 1990) looked at 28 plasmids with inserts of short H. influenzae DNA fragments. The uptake scores were published, and the insert sequences are in GenBank. All of these plasmids were preferentially taken up over a plasmid with no insert. The uptake scores of 15 of these inserts were also measured after being cut away from the plasmid vector; in most but not all cases uptake of the fragment correlated well with uptake of the plasmid that contained it. And most but not all of the sequenced inserts contained sequences resembling the USS core.

The other papers are older (1980-84). The first reports the work that first identified the USS core sequence. They sequenced four fragments that were preferentially taken up, and found five copies of an 11bp sequence. But there are complications that make this work hard to compare to the 1990 results. First, the DNA fragments did not come from H. influenzae but from the related bacterium H. parainfluenzae. I don't think this should matter. Second, uptake was not quantitated, just scored as yes or no; the band intensities in their gel suggest these fragments are taken up at least 10-fold better than other (no USS) fragments. A later paper (1982) from the same lab examined uptake of two of these fragments more quantitatively, and also looked at fragments with synthetic USSs, using what was then very new technology. The paper gives more gels, and also relative uptake scores for some of the fragments they tested. The final paper also measured relative uptake of purified fragments, this time from the H. influenzae phage HP1.

The 1990 data could be further analyzed by using software to do an unbiased motif-search - does this find the USS pattern? This could be restricted to those fragments that were strongly taken up, or applied to all the fragments. And does how well a particular fragment is taken up correlate with its having a better or worse match to this USS motif?

I would like to be able to then add the results of the earlier work into this analysis, but it's complicated by not having comparable measures of uptake. I think I will have to make some inferences, based on info that is common to the different papers, such as the uptake of the 'negative' fragments. But these inferences will probably not be as solidly justified as I would like.

I think this kind of problem is common to meta-analysis generally. Meta-analysis takes results from different studies that are individually weak or flawed in different ways, and tries to infer a stronger conclusion than could be obtained from any one of the studies. But because the studies are different, approximations always have to be made so the variables analyzed can be made comparable.

Yet another USS representation

I'm working on one more way to represent a USS consensus: positioning it on a DNA double helix.

This sketch shows the relationships between the USS positions on a real double helix. Unfortunately it doesn't indicate the relative strengths of the different positions' consensus (as the logo does). And I haven't yet taken the time (or found an easy way) to show which positions are at the back and which are at the front as they wrap around the helix. If you look closely you'll see that the pink and blue strands of the double helix are drawn to coil around each other, but the some of letters get in the way of seeing which strand is in front.

This sketch shows the relationships between the USS positions on a real double helix. Unfortunately it doesn't indicate the relative strengths of the different positions' consensus (as the logo does). And I haven't yet taken the time (or found an easy way) to show which positions are at the back and which are at the front as they wrap around the helix. If you look closely you'll see that the pink and blue strands of the double helix are drawn to coil around each other, but the some of letters get in the way of seeing which strand is in front.

When it's drawn properly it will show that the first part of the USS core (the AAGTG) is on the same side of the double helix as the two AT-rich flanking segments. The significance of this will (I hope) be clear once we understand how the USS functions in DNA uptake.

This sketch shows the relationships between the USS positions on a real double helix. Unfortunately it doesn't indicate the relative strengths of the different positions' consensus (as the logo does). And I haven't yet taken the time (or found an easy way) to show which positions are at the back and which are at the front as they wrap around the helix. If you look closely you'll see that the pink and blue strands of the double helix are drawn to coil around each other, but the some of letters get in the way of seeing which strand is in front.

This sketch shows the relationships between the USS positions on a real double helix. Unfortunately it doesn't indicate the relative strengths of the different positions' consensus (as the logo does). And I haven't yet taken the time (or found an easy way) to show which positions are at the back and which are at the front as they wrap around the helix. If you look closely you'll see that the pink and blue strands of the double helix are drawn to coil around each other, but the some of letters get in the way of seeing which strand is in front.When it's drawn properly it will show that the first part of the USS core (the AAGTG) is on the same side of the double helix as the two AT-rich flanking segments. The significance of this will (I hope) be clear once we understand how the USS functions in DNA uptake.

Representation matters

This is the post about how we represent our information about USS sequences.

There are really two issues: (1) what information do we have about how the sequences of DNA fragments affect which fragments competent H. influenzae cells take up?; and (2) how should we represent that information?. (Hmm, some confusion about punctuation here...)

(1) What information do we have about uptake specificity?

As I wrote yesterday, the information comes mainly from two kinds of analysis, uptake bias and genome abundance. Each kind of analysis produces a set of sequences whose similarities can be examined and represented in various ways.

Uptake bias experiments directly measure how many molecules of different DNA fragments are taken up by competent cells, and compares the sequences of these fragments. The papers describing these experiments appeared at least 15 years ago, and the experiments had various weaknesses. One of our postdocs is presently doing a more detailed analysis under more controlled conditions (see my Importance of USS positions post, and the USS-R-US blog). The original experiments used natural fragments of the H. influenzae genome; these were followed by experiments using fragments containing synthetic USS cores and using fragments from the genome of the H. influenzae phage HPi.

These experiments are fussy and time-consuming, so only a small number of fragments have ever been analyzed; I've assembled the results into a single Word file for comparison. They are sufficient to establish that fragments containing the 9bp sequence AAGTGCGGT (or sequences very similar to this) are taken up much more efficiently than fragments with very different sequences. The results also suggest that fragments with AT-rich sequences flanking this 'core' USS are taken up better than those with GC-rich flanking sequences.

This information was represented simply by the core consensus AAGTGCGGT, and most subsequent analyses have focused on this repeat. However this simple representation fails to capture the complexity of even what was known 20 years ago, and the focus on it has not been very helpful.

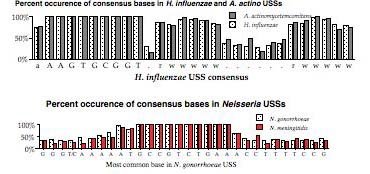

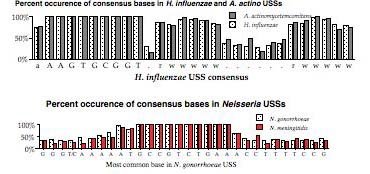

Things changed as soon as the H. influenzae genome sequence became available. Now it was possible to do the second kind of analysis, examining the abundances of different USS-related repeats in the genome (see Smith et al. 1995 Science 269:538). These repeats are thought to probably reflect the biases of the DNA uptake machinery - we are testing this assumption.

The above representation is taken from Smith et al. They searched the genome for every copy of AAGTGCGGT (in either orientation), and aligned the 1465 sequences this gave. The figure summarizes this alignment by showing the frequency of each of the four bases at each of 50 positions centered on the USS core. It gives no information about the core itself, but shows that some of the sequences to the right of the core have clear AT-rich consensuses (indicated by 'r' or 'w').

To get some information about the core iteslf, Smith et al repeated this analysis using 50bp segments contianing imperfect ("one-off") USSs. This analysis showed that some positions in the core have a stronger consensus than others. But the genome contains only about half as many one-off USSs as perfect USSs (~, and these are divided among 27 different ways to vary, so the effective sample size was quite a bit smaller than for the perfect USSs.

Since then we have done our own analyses on various genomes (see links to the USS-2006 and USS-2004 papers).

Our first alternative representation was a bar chart summarizing the strength of the consensus at each position. This was used for a NIH grant proposal, and later published in a paper with Wang that's not in the link list yet.

But lately we've been using a different representation scheme - "sequence logos". The multicoloured logo shown below is based on the same information as the table from the Smith et al. paper. Each stack of letters (or absence of stack) represents one of the 50 positions, and the height of each stack indicates how strongly the proportions of the bases differs from that expected of a random sequence. The size of each letter in the stack indicates its frequency at that position. By giving a visual representation of the proportions of each base at each position, it displays the alternatives at each position and the strength of the consensus much more directly than the Smith et al. table.

One remaining weakness is that the sequences used to generate the logo (or the Smith et al. tables) require starting with a specific sequence or set of sequences (e.g. perfect USS or set of one-off USSs). This means that the result will inevitably overrepresent whatever was searched for, and may underrepresent the strength of consensus at flanking sequences.

In the USS-2006 paper we made a small attempt to compensate for this, by also searching the A. pleuropneumoniae genome for some of the flanking consensuses. But what we really need to do is an 'unbiased' search of the whole genome for overrepresented sequence motifs. A grad student is familiar with programs that do this; he's been using them to search for the CRP-S promoter motifs in various genomes. But I don't know how easy it would be to modify them to search a genome.

Another remaining weakness is that the various consensus analyses tell us nothing about any tendency for the variation at any one position to be correlated with (??) the variation at any other position. For example, one comment to yesterday's post explained that the sequence TATA is much more flexible than other tetramers. But the consensus representations encourage us to think only of strings of AATTTTs and ATTTTT, and not to consider how many USS might have TATA in their flanking sequences.

There are really two issues: (1) what information do we have about how the sequences of DNA fragments affect which fragments competent H. influenzae cells take up?; and (2) how should we represent that information?. (Hmm, some confusion about punctuation here...)

(1) What information do we have about uptake specificity?

As I wrote yesterday, the information comes mainly from two kinds of analysis, uptake bias and genome abundance. Each kind of analysis produces a set of sequences whose similarities can be examined and represented in various ways.

Uptake bias experiments directly measure how many molecules of different DNA fragments are taken up by competent cells, and compares the sequences of these fragments. The papers describing these experiments appeared at least 15 years ago, and the experiments had various weaknesses. One of our postdocs is presently doing a more detailed analysis under more controlled conditions (see my Importance of USS positions post, and the USS-R-US blog). The original experiments used natural fragments of the H. influenzae genome; these were followed by experiments using fragments containing synthetic USS cores and using fragments from the genome of the H. influenzae phage HPi.

These experiments are fussy and time-consuming, so only a small number of fragments have ever been analyzed; I've assembled the results into a single Word file for comparison. They are sufficient to establish that fragments containing the 9bp sequence AAGTGCGGT (or sequences very similar to this) are taken up much more efficiently than fragments with very different sequences. The results also suggest that fragments with AT-rich sequences flanking this 'core' USS are taken up better than those with GC-rich flanking sequences.

This information was represented simply by the core consensus AAGTGCGGT, and most subsequent analyses have focused on this repeat. However this simple representation fails to capture the complexity of even what was known 20 years ago, and the focus on it has not been very helpful.

Things changed as soon as the H. influenzae genome sequence became available. Now it was possible to do the second kind of analysis, examining the abundances of different USS-related repeats in the genome (see Smith et al. 1995 Science 269:538). These repeats are thought to probably reflect the biases of the DNA uptake machinery - we are testing this assumption.

The above representation is taken from Smith et al. They searched the genome for every copy of AAGTGCGGT (in either orientation), and aligned the 1465 sequences this gave. The figure summarizes this alignment by showing the frequency of each of the four bases at each of 50 positions centered on the USS core. It gives no information about the core itself, but shows that some of the sequences to the right of the core have clear AT-rich consensuses (indicated by 'r' or 'w').

To get some information about the core iteslf, Smith et al repeated this analysis using 50bp segments contianing imperfect ("one-off") USSs. This analysis showed that some positions in the core have a stronger consensus than others. But the genome contains only about half as many one-off USSs as perfect USSs (~, and these are divided among 27 different ways to vary, so the effective sample size was quite a bit smaller than for the perfect USSs.

Since then we have done our own analyses on various genomes (see links to the USS-2006 and USS-2004 papers).

Our first alternative representation was a bar chart summarizing the strength of the consensus at each position. This was used for a NIH grant proposal, and later published in a paper with Wang that's not in the link list yet.

But lately we've been using a different representation scheme - "sequence logos". The multicoloured logo shown below is based on the same information as the table from the Smith et al. paper. Each stack of letters (or absence of stack) represents one of the 50 positions, and the height of each stack indicates how strongly the proportions of the bases differs from that expected of a random sequence. The size of each letter in the stack indicates its frequency at that position. By giving a visual representation of the proportions of each base at each position, it displays the alternatives at each position and the strength of the consensus much more directly than the Smith et al. table.

One remaining weakness is that the sequences used to generate the logo (or the Smith et al. tables) require starting with a specific sequence or set of sequences (e.g. perfect USS or set of one-off USSs). This means that the result will inevitably overrepresent whatever was searched for, and may underrepresent the strength of consensus at flanking sequences.

In the USS-2006 paper we made a small attempt to compensate for this, by also searching the A. pleuropneumoniae genome for some of the flanking consensuses. But what we really need to do is an 'unbiased' search of the whole genome for overrepresented sequence motifs. A grad student is familiar with programs that do this; he's been using them to search for the CRP-S promoter motifs in various genomes. But I don't know how easy it would be to modify them to search a genome.

Another remaining weakness is that the various consensus analyses tell us nothing about any tendency for the variation at any one position to be correlated with (??) the variation at any other position. For example, one comment to yesterday's post explained that the sequence TATA is much more flexible than other tetramers. But the consensus representations encourage us to think only of strings of AATTTTs and ATTTTT, and not to consider how many USS might have TATA in their flanking sequences.

USS biochemistry

This post will be about how biochemical modifications of DNA can tell us how different positions of the DNA sequence affect uptake by H. influenzae cells.

I'm planning a separate post about how we represent USS consensus sequences, and about how much information we have about the consensus, but for now I'll just present the extended 'consensus' as AAGTGCGGTnnRRWWWWnnnnnnRWWWWW, where 'n' means any base, 'R' means purine (A or G), and 'W' means weak (A or T). But above I've succeeded in including a 'sequence logo' representation of the genome consensus.

This consensus is mainly based on two kinds of information. The first is sequences that are consistently present in DNA fragments that H. influenzae preferentially takes up. The second is the consensus of repeats in the H. influenzae genome sequence. The consensus is supported by "ethylation-interference" experiments that Ham Smith's lab did about 25 years ago (Danner et al. 1980 Gene 11:311). I'm considering doing (persuading someone else to do?) more of these experiments, and a similar type of experiment called "missing nucleotide" analysis.

Both kinds of experiment work the same way. A short DNA fragment containing a USS (or variant) is radioactively labeled with 32P at one end, and then subject to a chemical treatment that modifies some of the bases or the phosphates that connect the bases. Competent H. influenzae cells are then allowed to take up (try to take up) the labeled modified DNA. It's expected that some modifications will interfere with (or sometimes enhance) this uptake, so fragments that by chance have modifications that interfere won't be taken up, and fragments that have modifications that enhance will be taken up more efficiently than the rest.

The cells, along with whatever DNA fragments they've taken up, are then washed, and DNA is prepared from them. This DNA will be a mixture of the non -radioactive cell DNA (which we can ignore) and the radioactive DNA they took up. The DNA is then treated with a second chemical that breaks the strands at the sites of modification, heated to separate the two strands, and then run in a gel and exposed to film.

If none of the modifications affect DNA uptake, we expect to see a band for each position of the sequence, as each position was equally likely to be modified and each modification was equally likely to be taken up. (We control for these assumptions by separately running in the gel DNA that was broken without ever having been exposed to cells.) But if modifications at some positions prevent fragments from being taken up, then the bands corresponding to breakage at those positions will be missing from the gel. If some modifications increase uptake, their bands will be stronger than the control bands. Here's a link to a paper that used both techniques to analyze interactions between RNA polymerase and the lac UV5 promoter.

The analysis called "ethylation interference" uses treatment with the chemical ethylnitrosourea, which puts ethyl groups (CH3CH2-) onto the phosphates that connect the bases. It chooses the phosphates randomly, so every position is equally likely to be modified. But the extent of ethylation should be limited, so that on average each DNA fragment gets modified at only a single random position.

Ham Smith's lab did this, and found that ethylation at most of the phosphates in the core interfered with uptake. A few positions outside the core, and one in the core, enhanced uptake. At that time these results were used mainly to confirm that the putative USS sequence repeat did interact with the cell's uptake machinery. The authors also speculated that they revealed the positions important for binding. (Note added later: In the next post, Representation matters, I show a figure from Smith et al. 1995 summarizing their analysis of USS in the H. influenzae genome. The *** above and below their consensus sequence indicate the positions where ethylation changed uptake.)

But now I think we may be able to use this kind of analysis to find out more about what the DNA does during the uptake process. Specifically, I suspect that some of the modifications may affect uptake by making the USS easier or harder to bend or kink. I really need to find out how ethylation is expected to change DNA bendability in general - is it more or less likely to bind at the position that's ethylated?

The other kind of modification, used by "missing nucleoside analysis", treats the labeled fragments with hydroxyl radicals. The hydroxyls destroy the deoxyribose, removing a single nucleoside (base plus deoxyribose) from one or more more-or-less random positions in the DNA. This creates a one-base gap in the strand and two extra negative charges (from the exposed phosphates I guess). The double-stranded DNA remains intact except at the site of this gap in one strand. After the cells take up the DNA it doesn't need to be broken, just heated to separate the strands before being run in the gel.

Loss of the nucleoside has two effects. First, any protein side chains that normally interact with that nucleoside make no contact with the DNA; this is likely to weaken the DNA-protein interaction. Second, the missing nucleoside and the broken backbone are likely to make the DNA more flexible and more easily denatured at the site of the gap. I think this might make DNA uptake easier. So, applied to USSs, weaker bands might identify where the DNA contacts the USS-recognizing proteins, and stronger bands might identify sites where bending, kinking or strand separation are needed.

CRP-S manuscript submitted!

We decided to send it to Nucleic Acids Research, because this journal has a strong reputation and is read by everyone who works on CRP.

For us, getting a manuscript to the submission stage is the limiting step. We have lots of results that haven't made it across this hurdle.

Here's my first within-blog link: PDF of the CRP-S manuscript. I hope it works.

And I'll also try to put a semi-permanent link to this manuscript in the sidebar. "Semi-permanent" because I hope we'll soon be able to replace it with a link to the published version.

For us, getting a manuscript to the submission stage is the limiting step. We have lots of results that haven't made it across this hurdle.

Here's my first within-blog link: PDF of the CRP-S manuscript. I hope it works.

And I'll also try to put a semi-permanent link to this manuscript in the sidebar. "Semi-permanent" because I hope we'll soon be able to replace it with a link to the published version.

The computer model of USS evolution

Yesterday the postdocs and I spent some time thinking/talking about our computer model project. I have all sorts of assumptions that the postdocs don't, resulting from all the work I did last year on this, so a lot of time is spent discovering these, explaining them (which requires my rethinking them) and then deciding whether they should be kept or discarded for the present work.

I also worked on my draft (outline, really) of the manuscript describing this work, and got in in good enough shape that it could be given to the postdocs. They can use it to get a clearer idea (because in writing) of what I'm thinking, and thus as a framework to see ways our various ideas can be improved.

And I went through the code of some versions of the program from last summer. Programming (for me) is like experimental research in that I mostly have a series of goals, each resulting from rethinking the previous work and results, but do a lot of blundering around. Thus the various versions have different sub-goals and different flaws, some of which I made of and some of which I didn't. And they're in different degrees of completion - some I think I had set aside without finishing making changes...

But luckily I was very conscientious about annotating the code as I wrote it, so every line or group of lines has at least a few words of explanation. On rereading I found the overall structure of the programs (all very similar) to be less complex than I had remembered it. It was easy for me to bracket off the little clusters of commands and label them with what they were accomplishing. The details of how they accomplish it are not so clear, because I've forgotten how many of the commands work, especially the search and replace ones that are the heart of the program.

I think it's a very nice program. Our first big challenge is to get it to run to 'equilibrium'. That is, after enough generations under any specified settings of the mutation rate and DNA uptake probabilities, the simulated DNA sequence should get to a state where its properties are not changing. Because the simulated steps have a component of randomness, there will still be lots of changes from generation to generation, but when averaged over many generations there should be no net change. Our main scientific goal is to characterize the equilibria, and the approach to equilibria, over a wide range of biologically-relevant conditions.

But getting to and recognizing the equilibria is challenging for a couple of reasons. First, because of the randomness, it's not easy to decide on good criteria for recognizing equilibrium. I'll post more on this another time. Second, getting to equilibrium ( or close enough to meet whatever criteria we set) can take a long time, and so we need to modify the model so we can run it on a fast computer 'grid' we have access to.

I also worked on my draft (outline, really) of the manuscript describing this work, and got in in good enough shape that it could be given to the postdocs. They can use it to get a clearer idea (because in writing) of what I'm thinking, and thus as a framework to see ways our various ideas can be improved.

And I went through the code of some versions of the program from last summer. Programming (for me) is like experimental research in that I mostly have a series of goals, each resulting from rethinking the previous work and results, but do a lot of blundering around. Thus the various versions have different sub-goals and different flaws, some of which I made of and some of which I didn't. And they're in different degrees of completion - some I think I had set aside without finishing making changes...

But luckily I was very conscientious about annotating the code as I wrote it, so every line or group of lines has at least a few words of explanation. On rereading I found the overall structure of the programs (all very similar) to be less complex than I had remembered it. It was easy for me to bracket off the little clusters of commands and label them with what they were accomplishing. The details of how they accomplish it are not so clear, because I've forgotten how many of the commands work, especially the search and replace ones that are the heart of the program.

I think it's a very nice program. Our first big challenge is to get it to run to 'equilibrium'. That is, after enough generations under any specified settings of the mutation rate and DNA uptake probabilities, the simulated DNA sequence should get to a state where its properties are not changing. Because the simulated steps have a component of randomness, there will still be lots of changes from generation to generation, but when averaged over many generations there should be no net change. Our main scientific goal is to characterize the equilibria, and the approach to equilibria, over a wide range of biologically-relevant conditions.

But getting to and recognizing the equilibria is challenging for a couple of reasons. First, because of the randomness, it's not easy to decide on good criteria for recognizing equilibrium. I'll post more on this another time. Second, getting to equilibrium ( or close enough to meet whatever criteria we set) can take a long time, and so we need to modify the model so we can run it on a fast computer 'grid' we have access to.

How DNA functions constrain USS locations

One question about the accumulation of USS sequences in genomes is the extent that they interfere with coding for proteins and other 'real' functions of the DNA. I've calculates that USS constrain 2-3% of the H. influenzae genome, taking into account the two flanking segments and also the strength of the consensus at these places. That was done years ago, and I probably should redo the calculation, especially as I'm not sure I can even find the original notes.

Seven or eight years ago we started working on this in collaboration with a theoretical physicist (turned bioinformatician) in Taiwan. One of his grad students did extensive analysis but has since gone on to other things, and his supervisor says we're free to finish the analysis and publish it without including them. So I've arranged with our current bioinformatics collaborators to redo the analysis, incorporating various improvements made possible by both our improved understanding of the issues and by the availability of more sequences to analyze.

This is a nice change from most of our work, in that we are starting out with a very good idea of what the results will look like. Not the details, but the general shape of things. I took advantage of this to write much of the paper in advance of getting the results the paper will describe. I made fake figures showing what the data will probably look like, and considering different ways we might present it. And then I sent the whole draft paper off to the collaborators, so they could see where their work is going. And I'm sitting back waiting for them to do the heavy lifting of generating the data.

What are the main findings? We already know that in H. influenzae and Neisseria meningitidis the USSs are preferentially found in the non-coding regions (these is only about 10% of the genome). In H. influenzae about 35% of USS are in non-coding, and in N. meningitidis about 60%. We'll check the ratios for other genomes too. We assume (hypothesize?) that this is because USSs constrain the ability of genes to code for the best amino acids.

The big analysis is done on the USSs that ARE in the coding regions, because here we can determine true sequence homology with other bacteria. We can thus use sequence alignments to find out the degree to which USSs avoid the most highly conserved (= most functionally constrained) parts of proteins. The result is that USSs are preferentially found in two kinds of places. The first is parts of proteins that show little evidence of functional constraint - for example the flexible hinges and linkers between domains. The second is parts of proteins where USSs don't change the amino acids; i.e. where the USS specifies the same amino acids that are optimal anyway. We can also analyze these USSs by the kind of proteins (or RNAs) the different genes produce - USSs are preferentially found in the less important proteins. And we can check whether the protein-coding part of the genome has spare places where USSs could be put without changing the amino acid sequence of the protein. H. influenzae has quite a few of these (I forget the numbers).

Hmm, writing this overview is giving me better ideas of how the paper should be organized.

Seven or eight years ago we started working on this in collaboration with a theoretical physicist (turned bioinformatician) in Taiwan. One of his grad students did extensive analysis but has since gone on to other things, and his supervisor says we're free to finish the analysis and publish it without including them. So I've arranged with our current bioinformatics collaborators to redo the analysis, incorporating various improvements made possible by both our improved understanding of the issues and by the availability of more sequences to analyze.

This is a nice change from most of our work, in that we are starting out with a very good idea of what the results will look like. Not the details, but the general shape of things. I took advantage of this to write much of the paper in advance of getting the results the paper will describe. I made fake figures showing what the data will probably look like, and considering different ways we might present it. And then I sent the whole draft paper off to the collaborators, so they could see where their work is going. And I'm sitting back waiting for them to do the heavy lifting of generating the data.

What are the main findings? We already know that in H. influenzae and Neisseria meningitidis the USSs are preferentially found in the non-coding regions (these is only about 10% of the genome). In H. influenzae about 35% of USS are in non-coding, and in N. meningitidis about 60%. We'll check the ratios for other genomes too. We assume (hypothesize?) that this is because USSs constrain the ability of genes to code for the best amino acids.